2 Results

2.1 Overview

The following sections contain the main results reported in the paper.

The results are shown next to the R code that produced them.

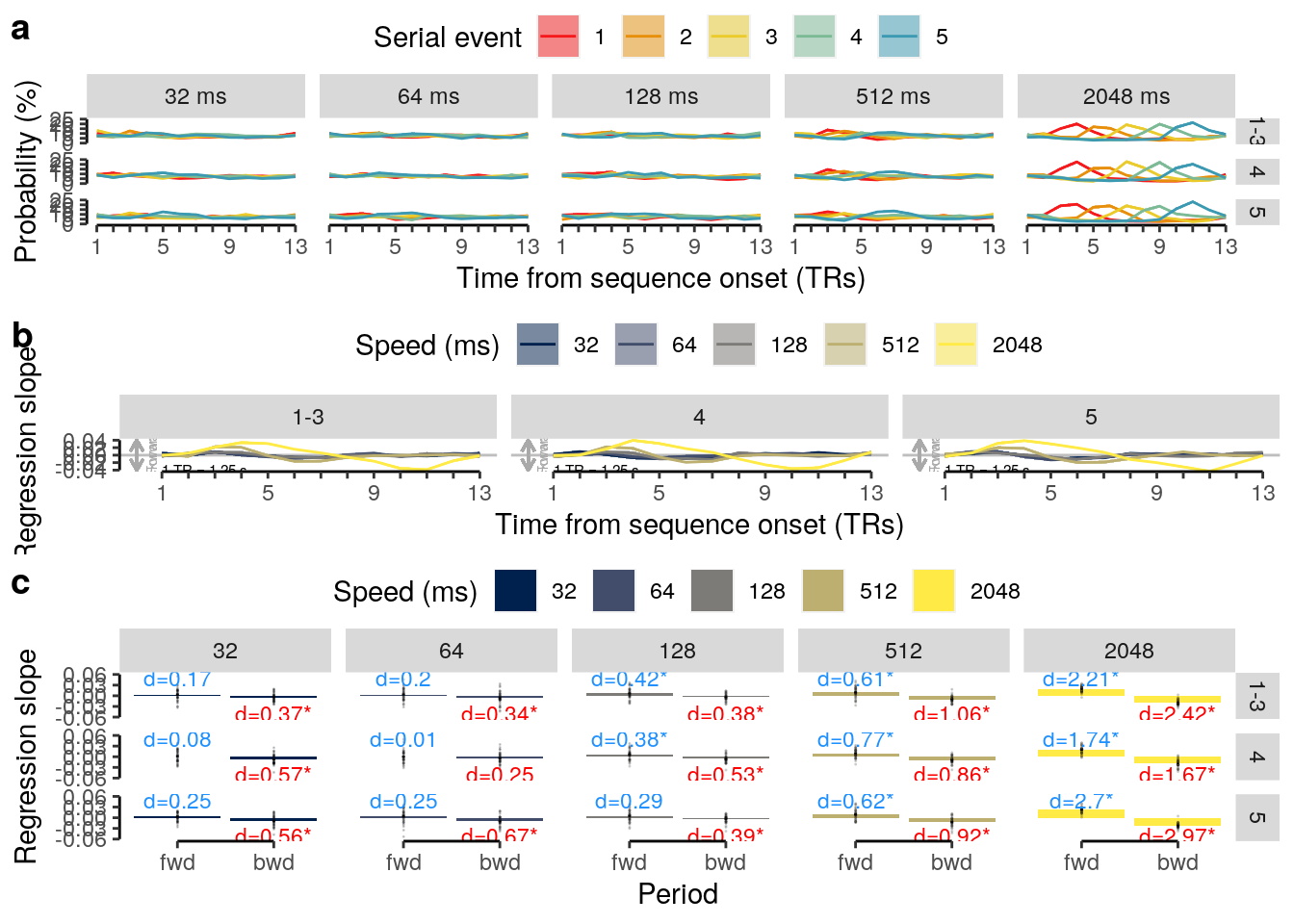

Specifically, all code is written in RMarkdown which allows to combine analysis code with regular text.

The rendered RMarkdown files you see here, were generated automatically based on data stored in the relevant data sets listed above using continuous integration in the associated GitLab repository.

This means that upon any change to the analysis code, the relevant data is retrieved from the relevant sub-directories and all analyses are re-generated.

Please see the following sections for details on the computational environment and the continuous integration pipeline.

2.1.0.1 Computational environment

Below we show the version information about R, the operating system (OS) and attached or loaded R packages using sessionInfo()

## R version 4.0.3 (2020-10-10)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Debian GNU/Linux bullseye/sid

##

## Matrix products: default

## BLAS: /usr/lib/x86_64-linux-gnu/openblas-pthread/libblas.so.3

## LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.10.so

##

## locale:

## [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

## [3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

## [5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=en_US.UTF-8

## [7] LC_PAPER=en_US.UTF-8 LC_NAME=C

## [9] LC_ADDRESS=C LC_TELEPHONE=C

## [11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## loaded via a namespace (and not attached):

## [1] compiler_4.0.3 magrittr_1.5 bookdown_0.21 htmltools_0.5.0

## [5] tools_4.0.3 rstudioapi_0.11 yaml_2.2.1 stringi_1.5.3

## [9] rmarkdown_2.5 knitr_1.30 stringr_1.4.0 digest_0.6.27

## [13] xfun_0.18 rlang_0.4.8 evaluate_0.142.1.0.2 Continuous integration

Below we show the continuous integration script that was used to automatically generate this project website. This is made possible by using thee great tools DataLad and bookdown.

stages:

- retrieve

- publish

datalad:

stage: retrieve

image:

name: registry.git.mpib-berlin.mpg.de/wittkuhn/highspeed/datalad:0.13.5

entrypoint: [""]

script:

# rclone configurations:

- rclone config create highspeed-bids seafile url https://keeper.mpdl.mpg.de/ user wittkuhn@mpib-berlin.mpg.de library highspeed-bids pass $CI_KEEPER_PASS

- rclone config create highspeed-analysis seafile url https://keeper.mpdl.mpg.de/ user wittkuhn@mpib-berlin.mpg.de library highspeed-analysis pass $CI_KEEPER_PASS

#- rclone config create highspeed-fmriprep seafile url https://keeper.mpdl.mpg.de/ user wittkuhn@mpib-berlin.mpg.de library highspeed-fmriprep pass $CI_KEEPER_PASS

- rclone config create highspeed-mriqc seafile url https://keeper.mpdl.mpg.de/ user wittkuhn@mpib-berlin.mpg.de library highspeed-mriqc pass $CI_KEEPER_PASS

- rclone config create highspeed-masks seafile url https://keeper.mpdl.mpg.de/ user wittkuhn@mpib-berlin.mpg.de library highspeed-masks pass $CI_KEEPER_PASS

- rclone config create highspeed-glm seafile url https://keeper.mpdl.mpg.de/ user wittkuhn@mpib-berlin.mpg.de library highspeed-glm pass $CI_KEEPER_PASS

# git configuration:

- git config --global user.name "GitLab CI"

- git config --global user.email "ci@git.mpib-berlin.mpg.de"

# remove and install all datasets:

- datalad remove --dataset . bibliography

- datalad clone --dataset . https://github.com/lnnrtwttkhn/bibliography.git

#- datalad remove --dataset . highspeed-analysis

- rm -rf highspeed-analysis

- datalad install --dataset . -r https://github.com/lnnrtwttkhn/highspeed-analysis.git

- datalad remove --dataset . highspeed-bids

- datalad clone --dataset . https://github.com/lnnrtwttkhn/highspeed-bids.git

- datalad remove --dataset . highspeed-fmriprep

- datalad clone --dataset . https://github.com/lnnrtwttkhn/highspeed-fmriprep.git

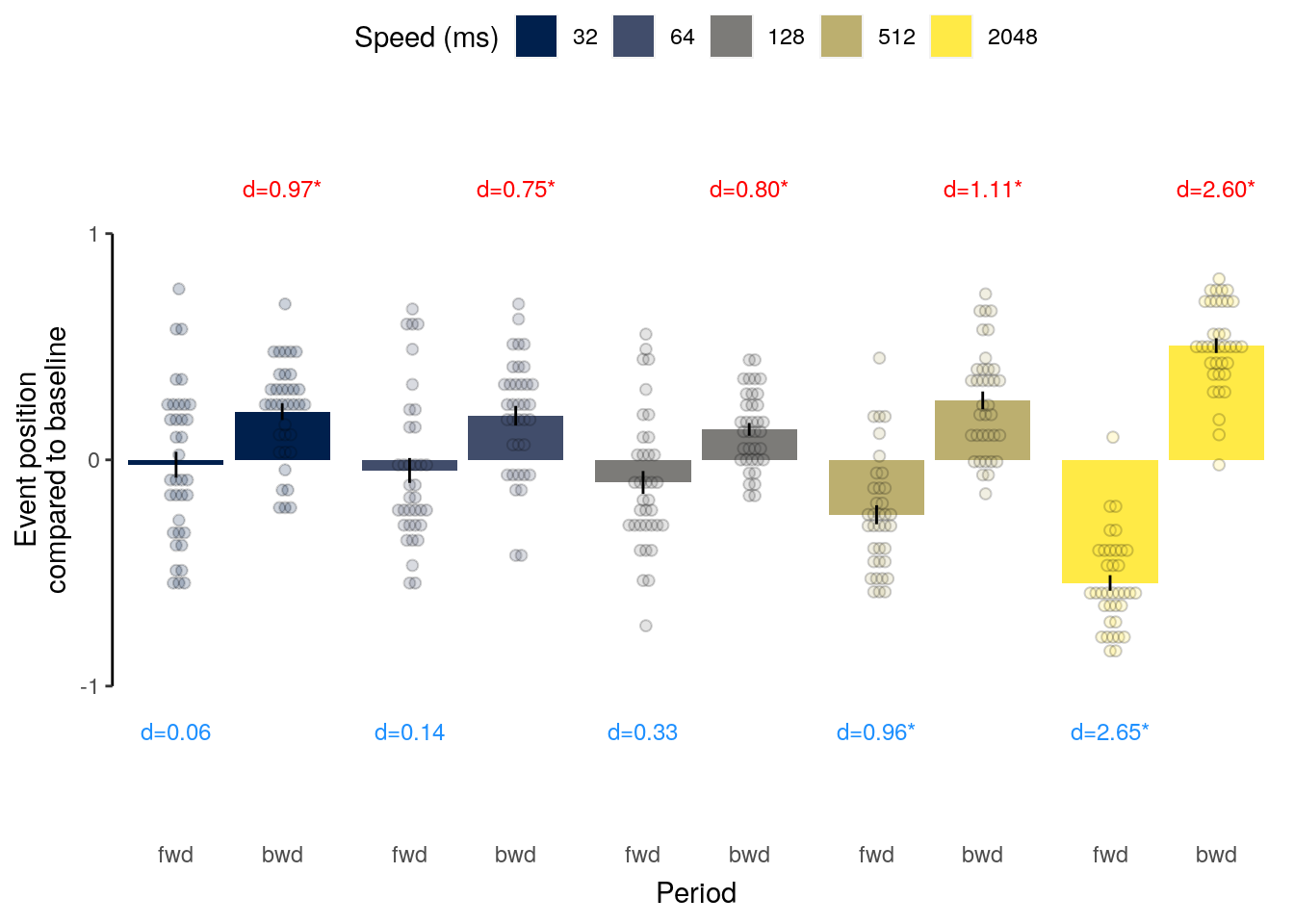

- datalad remove --dataset . highspeed-mriqc

- datalad clone --dataset . https://github.com/lnnrtwttkhn/highspeed-mriqc.git

- datalad remove --dataset . highspeed-masks

- datalad clone --dataset . https://github.com/lnnrtwttkhn/highspeed-masks.git

- datalad remove --dataset . highspeed-glm

- datalad clone --dataset . https://github.com/lnnrtwttkhn/highspeed-glm.git

- datalad remove --dataset . highspeed-decoding

- datalad clone --dataset . https://github.com/lnnrtwttkhn/highspeed-decoding.git

# add gin siblings for all datasets:

- datalad siblings add --dataset highspeed-bids -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-bids

- datalad siblings add --dataset highspeed-analysis -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-analysis

- datalad siblings add --dataset highspeed-analysis/data/bids -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-bids

- datalad siblings add --dataset highspeed-analysis/data/decoding -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-decoding

#- datalad siblings add --dataset highspeed-fmriprep -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-fmriprep

- datalad siblings add --dataset highspeed-mriqc -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-mriqc

- datalad siblings add --dataset highspeed-masks -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-masks

- datalad siblings add --dataset highspeed-glm -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-glm

- datalad siblings add --dataset highspeed-decoding -s gin --url https://gin.g-node.org/lnnrtwttkhn/highspeed-decoding

# enable keeper siblings for all datasets:

- datalad siblings --dataset highspeed-analysis enable --name keeper

- datalad siblings --dataset highspeed-bids enable --name keeper

- datalad siblings --dataset highspeed-analysis/data/bids enable --name keeper

#- datalad siblings --dataset highspeed-fmriprep enable --name keeper

- datalad siblings --dataset highspeed-mriqc enable --name keeper

- datalad siblings --dataset highspeed-masks enable --name keeper

- datalad siblings --dataset highspeed-glm enable --name keeper

# configure gin and keeper siblings for all datasets:

- datalad siblings --dataset highspeed-bids configure --name origin --publish-depends gin --publish-depends keeper

- datalad siblings --dataset highspeed-analysis configure --name origin --publish-depends gin

- datalad siblings --dataset highspeed-analysis/data/bids configure --name origin --publish-depends gin --publish-depends keeper

#- datalad siblings --dataset highspeed-fmriprep configure --name origin --publish-depends gin --publish-depends keeper

- datalad siblings --dataset highspeed-mriqc configure --name origin --publish-depends gin --publish-depends keeper

- datalad siblings --dataset highspeed-masks configure --name origin --publish-depends gin --publish-depends keeper

- datalad siblings --dataset highspeed-glm configure --name origin --publish-depends gin --publish-depends keeper

- datalad siblings --dataset highspeed-decoding configure --name origin --publish-depends gin

- datalad siblings --dataset highspeed-analysis/data/decoding configure --name origin --publish-depends gin

# get data

- datalad get highspeed-analysis/data/bids/participants.tsv

- datalad get highspeed-analysis/data/bids/sub-*/ses-*/func/*events.tsv || true

- datalad get highspeed-analysis/data/bids/sub-*/ses-*/func/*events.tsv

- datalad get highspeed-analysis/data/decoding/decoding/sub-*/data/*_decoding.csv || true

- datalad get highspeed-analysis/data/decoding/decoding/sub-*/data/*_decoding.csv

- datalad get highspeed-analysis/data/decoding/decoding/*/data/*thresholding.csv || true

- datalad get highspeed-analysis/data/decoding/decoding/*/data/*thresholding.csv

- datalad get highspeed-analysis/data/tmp/dt_pred_conc_slope.Rdata

artifacts:

paths:

- bibliography/code/bibliography.bib

- highspeed-bids/code

- highspeed-analysis/code

- highspeed-analysis/figures

- highspeed-analysis/sourcedata

- highspeed-analysis/data/bids/sub-*/ses-*/func/*events.tsv

- highspeed-analysis/data/decoding/decoding/sub-*/data/*_decoding.csv

- highspeed-analysis/data/decoding/decoding/sub-*/data/*_thresholding.csv

- highspeed-analysis/data/tmp

- highspeed-fmriprep/code

- highspeed-mriqc/code

- highspeed-glm/code

- highspeed-decoding/code

expire_in: 1 hour

only:

- master

pages:

stage: publish

image: registry.git.mpib-berlin.mpg.de/wittkuhn/highspeed/bookdown:latest

script:

- Rscript -e "options(bookdown.render.file_scope = FALSE); bookdown::render_book('index.Rmd', 'all', output_dir = 'public')"

artifacts:

paths:

- public

- highspeed-analysis/figures

- highspeed-analysis/sourcedata

only:

- master2.2 Behavior

2.2.1 Initialization

2.2.1.1 Load data and files

We set the paths and source the basic setup script:

knitr::opts_chunk$set(echo = TRUE)

# find the path to the root of this project:

if (!requireNamespace("here")) install.packages("here")

if ( basename(here::here()) == "highspeed" ) {

path_root = here::here("highspeed-analysis")

} else {

path_root = here::here()

}

# source all relevant functions from the setup R script:

source(file.path(path_root, "code", "highspeed-analysis-setup.R"))2.2.1.2 Signal-detection labeling

We assign labels from signal detection theory that will be used in one of the analyses below:

# denotes misses (key was not pressed and stimulus was upside-down):

dt_events$sdt_type[

dt_events$key_down == 0 & dt_events$stim_orient == 180] <- "miss"

# denotes hits (key was pressed and stimulus was upside-down):

dt_events$sdt_type[

dt_events$key_down == 1 & dt_events$stim_orient == 180] <- "hit"

# denotes correct rejection (key was not pressed and stimulus was upright):

dt_events$sdt_type[

dt_events$key_down == 0 & dt_events$stim_orient == 0] <- "correct rejection"

# denotes false alarms (key was pressed and stimulus was upright):

dt_events$sdt_type[

dt_events$key_down == 1 & dt_events$stim_orient == 0] <- "false alarm"2.2.2 Stimulus timings

We calculate the differences between consecutive stimulus onsets:

dt_events %>%

# get duration of stimuli by calculating differences between consecutive onsets:

.[, duration_check := shift(onset, type = "lead") - onset,

by = .(subject, run_study)] %>%

# get the difference between the expected and actual stimulus duration:

.[, duration_diff := duration_check - duration, by = .(subject, run_study)] %>%

# for each condition and trial check participants' responses:

.[, by = .(subject, condition, trial), ":=" (

# for each trial check if a key has been pressed:

trial_key_down = ifelse(any(key_down == 1, na.rm = TRUE), 1, 0),

# for each trial check if the participant was accurate:

trial_accuracy = ifelse(any(accuracy == 1, na.rm = TRUE), 1, 0)

)] %>%

.[, trial_type := factor(trial_type, levels = rev(unique(trial_type)))]timings_summary = dt_events %>%

filter(condition %in% c("sequence", "repetition") & trial_type == "interval") %>%

setDT(.) %>%

.[, by = .(subject, condition, trial_type), {

results = t.test(duration_diff, mu = 0.001, alternative = "two.sided")

list(

mean = mean(duration_diff, na.rm = TRUE),

sd = sd(duration_diff, na.rm = TRUE),

min = min(duration_diff, na.rm = TRUE),

max = max(duration_diff, na.rm = TRUE),

num = .N,

tvalue = results$statistic,

df = results$parameter,

pvalue = results$p.value,

pvalue_round = round_pvalues(results$p.value)

)

}] %>%

.[, trial_type := factor(trial_type, levels = rev(unique(trial_type)))] %>%

setorder(., condition, trial_type)

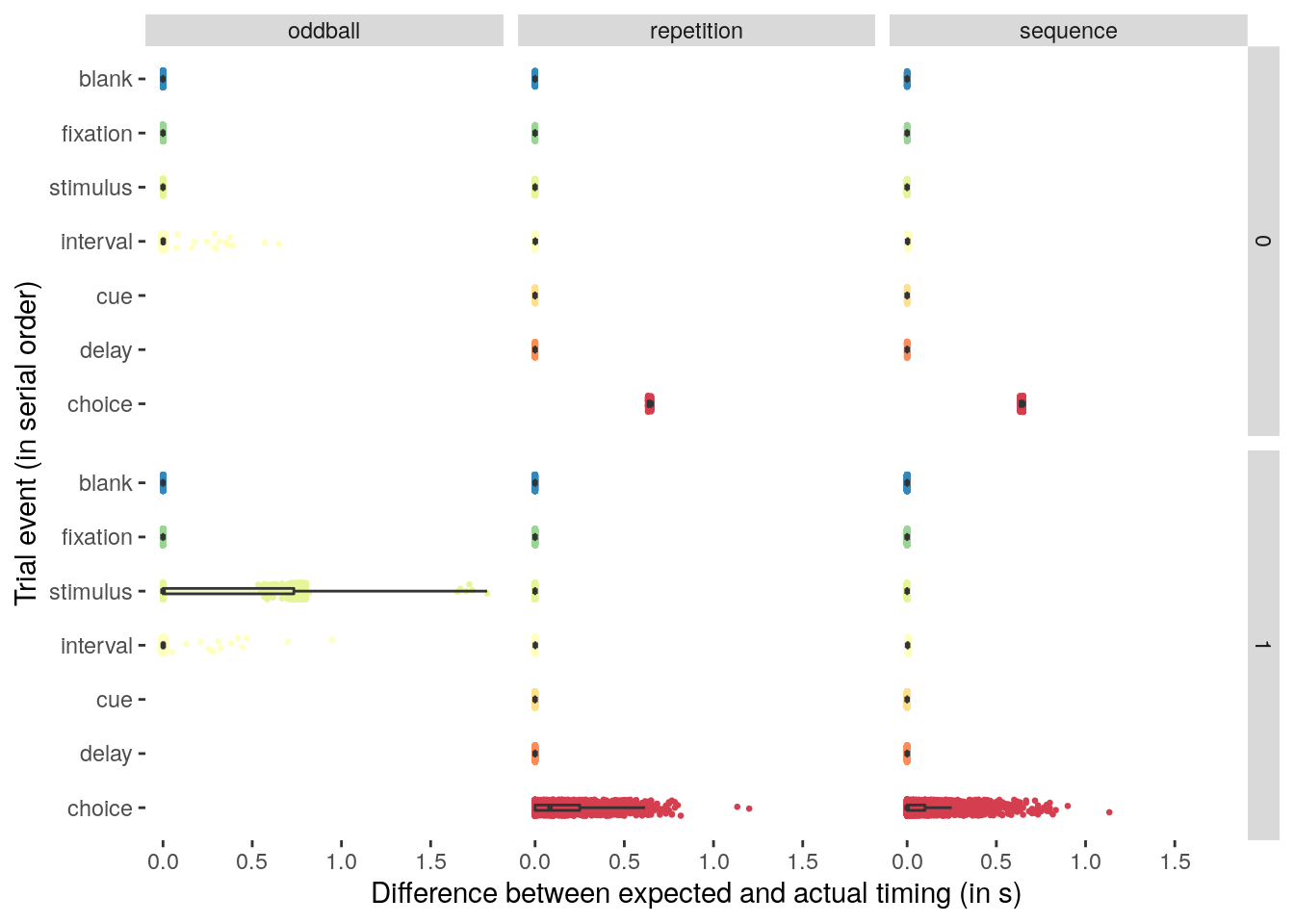

rmarkdown::paged_table(timings_summary)We plot the differences in expected versus actual timings of individual stimuli in the behavioral task:

ggplot(data = dt_events, aes(

y = as.numeric(duration_diff),

x = as.factor(trial_type),

fill = as.factor(trial_type)), na.rm = TRUE) +

facet_grid(vars(as.factor(trial_key_down)), vars(as.factor(condition))) +

geom_point(

aes(y = as.numeric(duration_diff), color = as.factor(trial_type)),

position = position_jitter(width = .15), size = .5, alpha = 1, na.rm = TRUE) +

geom_boxplot(width = .1, outlier.shape = NA, alpha = 0.5, na.rm = TRUE) +

scale_color_brewer(palette = "Spectral") +

scale_fill_brewer(palette = "Spectral") +

#coord_capped_flip(left = "both", bottom = "both", expand = TRUE) +

coord_flip() +

theme(legend.position = "none") +

xlab("Trial event (in serial order)") +

ylab("Difference between expected and actual timing (in s)") +

theme(strip.text = element_text(margin = margin(unit(c(t = 2, r = 2, b = 2, l = 2), "pt")))) +

theme(legend.position = "none") +

theme(panel.background = element_blank())

We check the timing of the inter-trial interval on oddball trials:

dt_odd_iti_mean = dt_events %>%

# filter for the stimulus intervals on oddball trials:

filter(condition == "oddball" & trial_type == "interval") %>%

setDT(.) %>%

# calculate the mean duration of the oddball intervals for each participant:

.[, by = .(subject), .(

mean_duration = mean(duration, na.rm = TRUE),

num_trials = .N

)] %>%

verify(num_trials == 600)

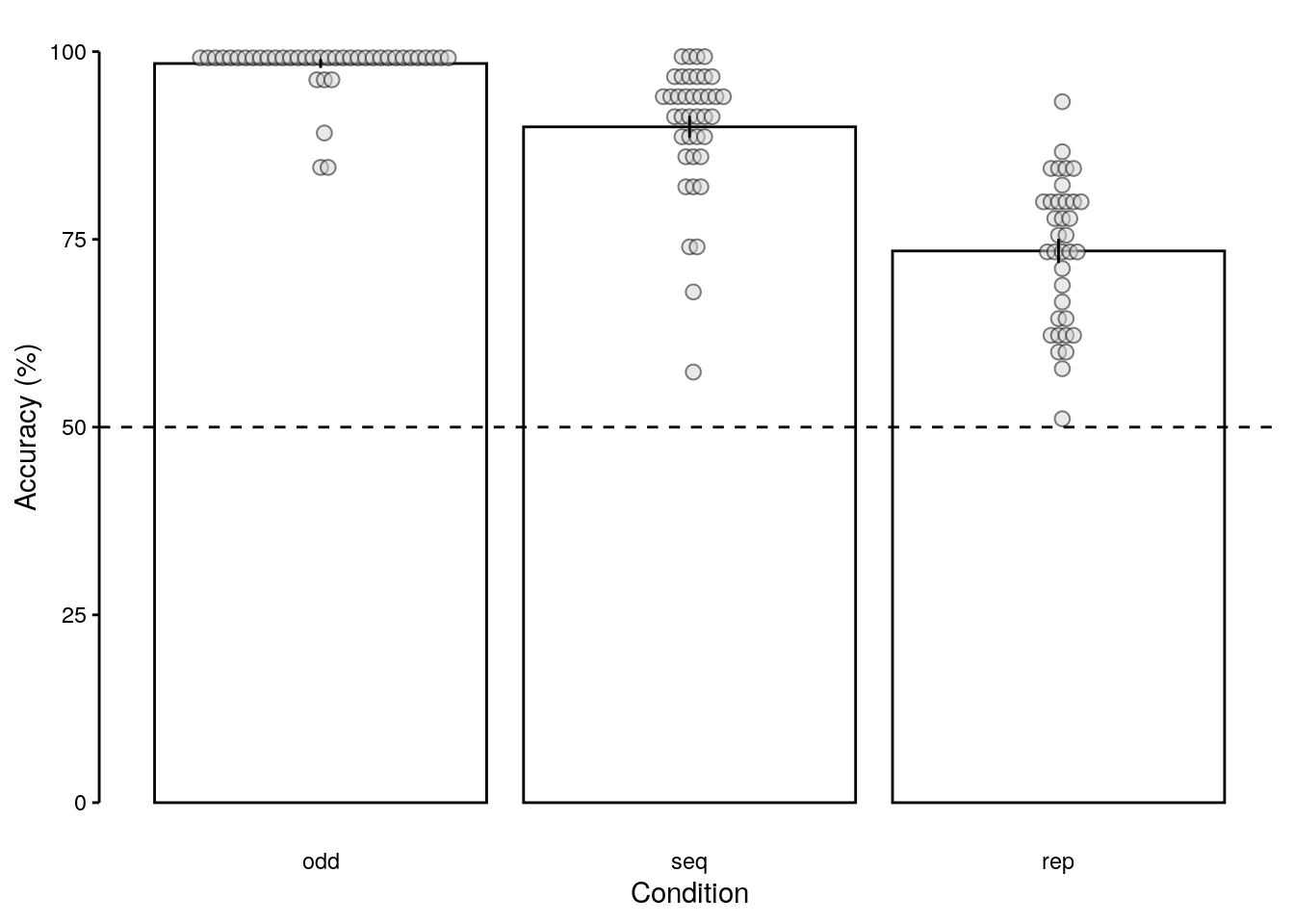

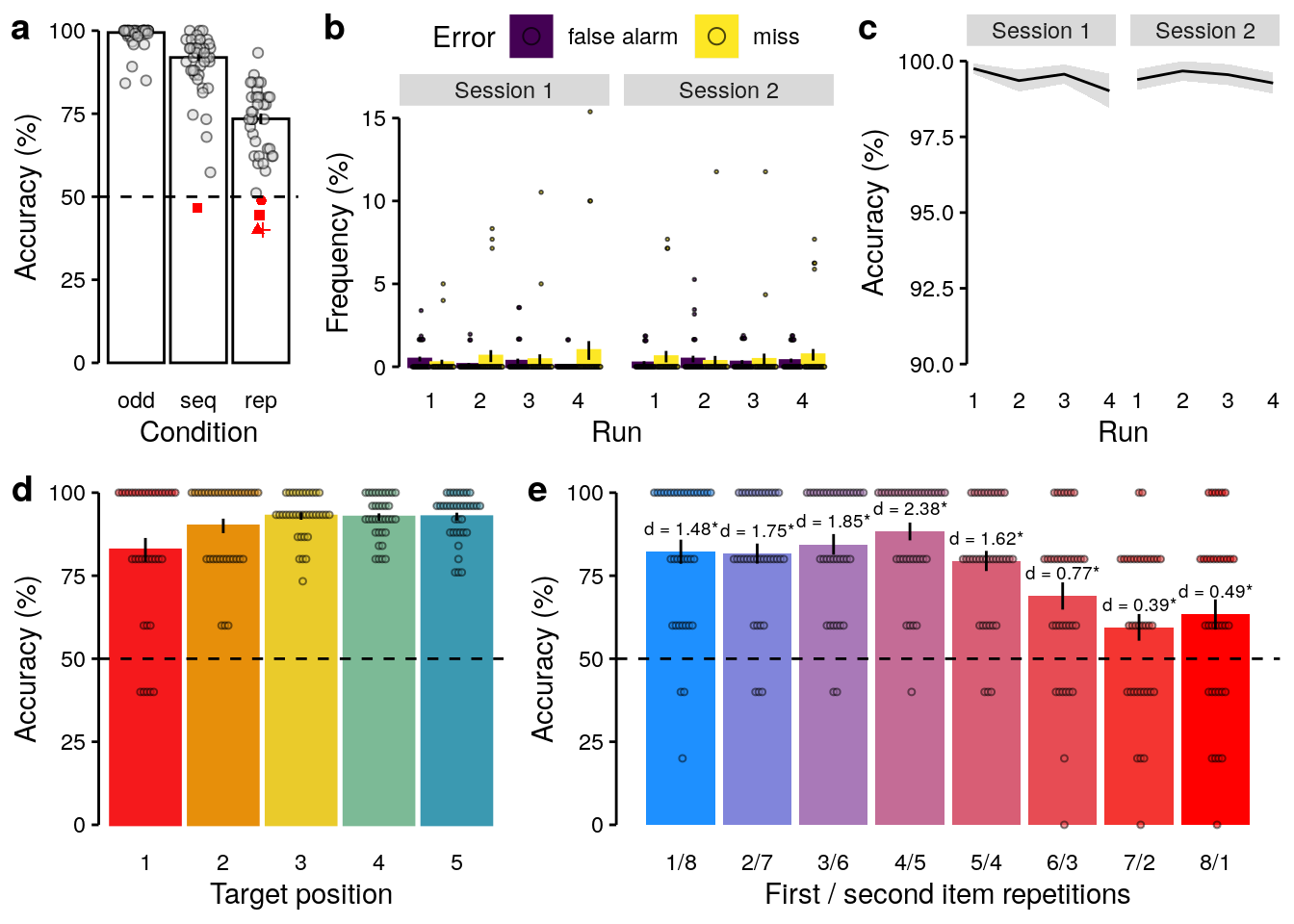

rmarkdown::paged_table(head(dt_odd_iti_mean))2.2.3 Behavioral performance

2.2.3.1 Mean accuracy

We calculate the mean behavioral accuracy across all trials of all three task conditions (slow, sequence, and repetition trials):

# behavioral chance level is at 50%:

chance_level = 50

dt_acc = dt_events %>%

# filter out all events that are not related to a participants' response:

filter(!is.nan(accuracy)) %>%

# filter for only upside down stimuli on slow trials:

filter(!(condition == "oddball" & stim_orient == 0)) %>%

setDT(.) %>%

# check if the number of trials matches for every subject:

verify(.[(condition == "oddball"), by = .(subject), .(

num_trials = .N)]$num_trials == 120) %>%

verify(.[(condition == "sequence"), by = .(subject), .(

num_trials = .N)]$num_trials == 75) %>%

verify(.[(condition == "repetition"), by = .(subject), .(

num_trials = .N)]$num_trials == 45) %>%

# calculate the average accuracy for each participant and condition:

.[, by = .(subject, condition), .(

mean_accuracy = mean(accuracy, na.rm = TRUE) * 100,

num_trials = .N)] %>%

# check if the accuracy values are between 0 and 100:

assert(within_bounds(lower.bound = 0, upper.bound = 100), mean_accuracy) %>%

# create new variable that specifies excluded participants:

mutate(exclude = ifelse(mean_accuracy < chance_level, "yes", "no")) %>%

# create a short name for the conditions:

mutate(condition_short = substr(condition, start = 1, stop = 3)) %>%

# reorder the condition factor in descending order of accuracy:

transform(condition_short = fct_reorder(

condition_short, mean_accuracy, .desc = TRUE))

rmarkdown::paged_table(dt_acc)We create a list of participants that will be excluded because their performance is below the 50% chance level in either or both sequence and repetition trials:

# create a list with all excluded subject ids and print the list:

subjects_excluded = unique(dt_acc$subject[dt_acc$exclude == "yes"])

print(subjects_excluded)## [1] "sub-24" "sub-31" "sub-37" "sub-40"We calculate the mean behavioral accuracy across all three task conditions (slow, sequence, and repetition trials), excluding participants that performed below chance on either or both sequence and repetition trials:

dt_acc_mean = dt_acc %>%

# filter out all data of excluded participants:

filter(!(subject %in% unique(subject[exclude == "yes"]))) %>%

# check if the number of participants matches expectations:

verify(length(unique(subject)) == 36) %>%

setDT(.) %>%

# calculate mean behavioral accuracy across participants for each condition:

.[, by = .(condition), {

ttest_results = t.test(

mean_accuracy, mu = chance_level, alternative = "greater")

list(

pvalue = ttest_results$p.value,

pvalue_rounded = round_pvalues(ttest_results$p.value),

tvalue = round(ttest_results$statistic, digits = 2),

conf_lb = round(ttest_results$conf.int[1], digits = 2),

conf_ub = round(ttest_results$conf.int[2], digits = 2),

df = ttest_results$parameter,

num_subs = .N,

mean_accuracy = round(mean(mean_accuracy), digits = 2),

SD = round(sd(mean_accuracy), digits = 2),

cohens_d = round((mean(mean_accuracy) - chance_level) / sd(mean_accuracy), 2),

sem_upper = mean(mean_accuracy) + (sd(mean_accuracy)/sqrt(.N)),

sem_lower = mean(mean_accuracy) - (sd(mean_accuracy)/sqrt(.N))

)}] %>%

verify(num_subs == 36) %>%

# create a short name for the conditions:

mutate(condition_short = substr(condition, start = 1, stop = 3)) %>%

# reorder the condition factor in descending order of accuracy:

transform(condition_short = fct_reorder(condition_short, mean_accuracy, .desc = TRUE))

# show the table (https://rstudio.github.io/distill/tables.html):

rmarkdown::paged_table(dt_acc_mean)2.2.3.2 Above-chance performance

We plot only data of above-chance performers:

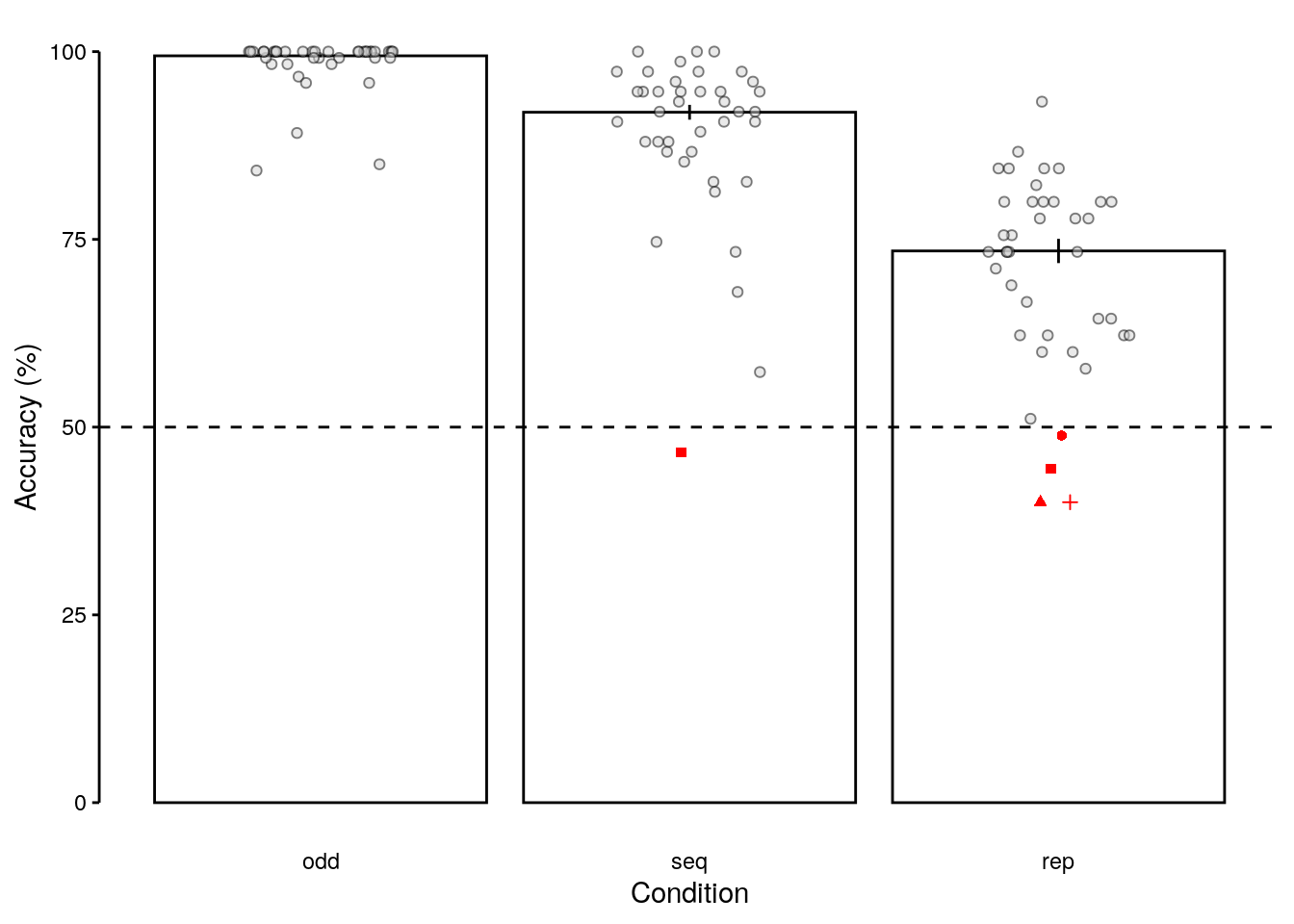

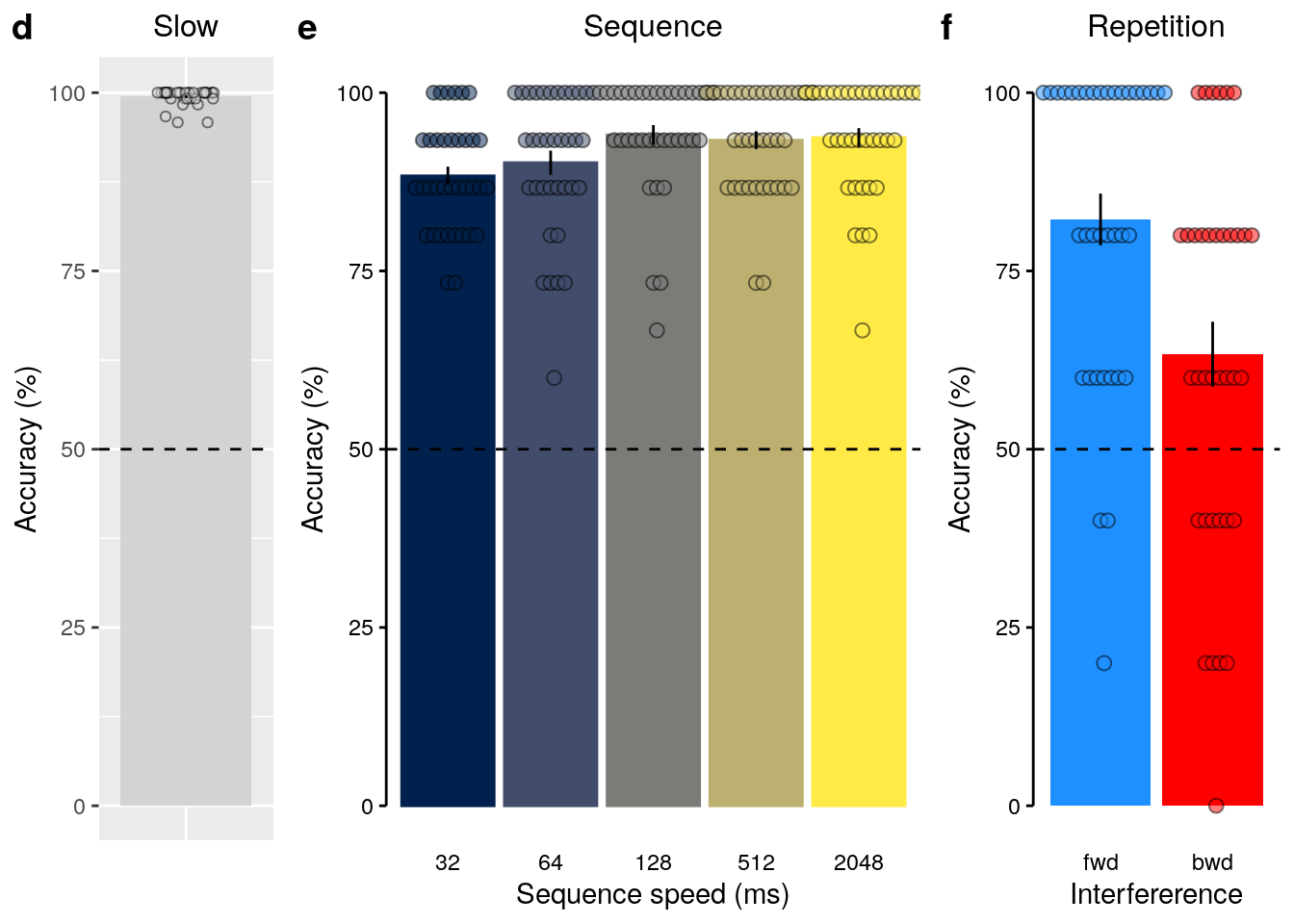

2.2.3.3 Figure S1a

We plot data of all participants with below chance performers highlighted in red.

fig_behav_all_outlier = ggplot(data = dt_acc_mean,

mapping = aes(x = as.factor(condition_short), y = as.numeric(mean_accuracy),

group = as.factor(condition_short), fill = as.factor(condition_short))) +

geom_bar(aes(fill = as.factor(condition)), stat = "identity",

color = "black", fill = "white") +

geom_point(data = subset(dt_acc, exclude == "no"),

aes(color = as.factor(exclude)),

position = position_jitter(width = 0.2, height = 0, seed = 2),

alpha = 0.5, inherit.aes = TRUE, pch = 21,

color = "black", fill = "lightgray") +

geom_point(data = subset(dt_acc, exclude == "yes"),

aes(color = as.factor(exclude), shape = as.factor(subject)),

position = position_jitter(width = 0.05, height = 0, seed = 4),

alpha = 1, inherit.aes = TRUE, color = "red") +

geom_errorbar(aes(ymin = sem_lower, ymax = sem_upper), width = 0.0, color = "black") +

ylab("Accuracy (%)") + xlab("Condition") +

scale_color_manual(values = c("darkgray", "red"), name = "Outlier") +

geom_hline(aes(yintercept = 50), linetype = "dashed", color = "black") +

coord_capped_cart(left = "both", bottom = "none", expand = TRUE, ylim = c(0, 100)) +

theme(axis.ticks.x = element_line(color = "white"),

axis.line.x = element_line(color = "white")) +

guides(shape = FALSE, fill = FALSE) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

axis.ticks.x = element_blank(),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

fig_behav_all_outlier

2.2.3.4 Source Data File Fig. S1a

dt_acc %>%

select(-num_trials) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_s1a.csv"),

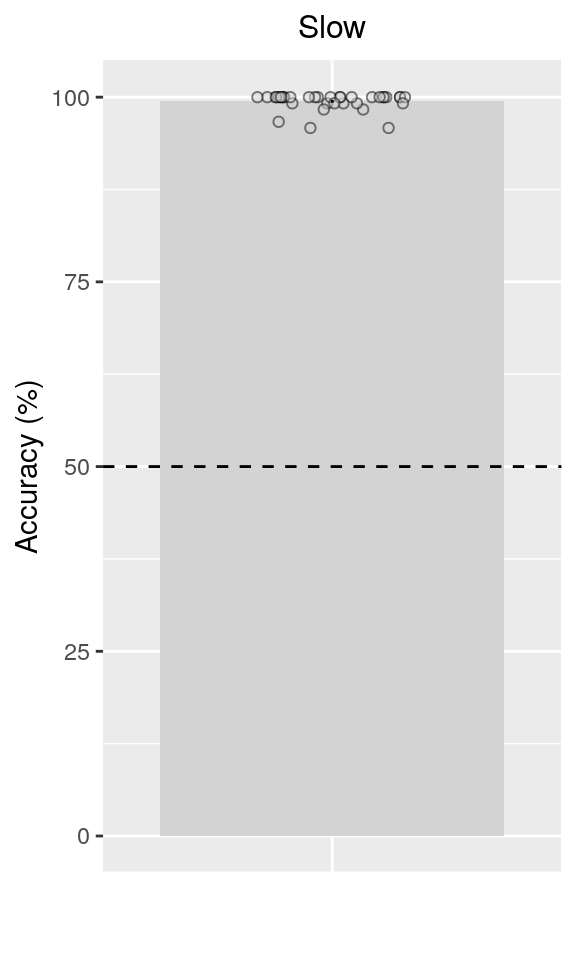

row.names = FALSE)2.2.4 Slow trials

2.2.4.1 Mean accuracy (all trials)

We calculate the mean accuracy on slow trials (oddball task condition) across all trials in the final sample (only participants who performed above chance):

# we use the dataframe containing the accuracy data

dt_acc_odd = dt_acc %>%

# filter for oddball / slow trials only:

filter(condition == "oddball") %>%

# exclude participants with below chance performance::

filter(!(subject %in% subjects_excluded)) %>%

# verify that the number of participants (final sample) is correct:

verify(all(.N == 36))We plot the mean behavioral accuracy on slow trials (oddball task condition) in the final sample:

fig_behav_odd = ggplot(data = dt_acc_odd, aes(

x = "mean_acc", y = as.numeric(mean_accuracy))) +

geom_bar(stat = "summary", fun = "mean", fill = "lightgray") +

#geom_dotplot(binaxis = "y", stackdir = "center", stackratio = 0.5,

# color = "black", fill = "lightgray", alpha = 0.5,

# inherit.aes = TRUE, binwidth = 0.5) +

geom_point(position = position_jitter(width = 0.2, height = 0, seed = 2),

alpha = 0.5, inherit.aes = TRUE, pch = 21,

color = "black", fill = "lightgray") +

geom_errorbar(stat = "summary", fun.data = "mean_se", width = 0.0, color = "black") +

ylab("Accuracy (%)") + xlab("Condition") +

scale_color_manual(values = c("darkgray", "red"), name = "Outlier") +

geom_hline(aes(yintercept = 50), linetype = "dashed", color = "black") +

#coord_capped_cart(left = "both", bottom = "none", expand = TRUE, ylim = c(90, 100)) +

theme(plot.title = element_text(size = 12, face = "plain")) +

theme(axis.ticks.x = element_line(color = "white"),

axis.line.x = element_line(color = "white")) +

theme(axis.title.x = element_text(color = "white"),

axis.text.x = element_text(color = "white")) +

ggtitle("Slow") +

theme(plot.title = element_text(hjust = 0.5))

fig_behav_odd

2.2.4.2 Mean accuracy (per run)

We calculate the mean behavioral accuracy on slow trials (oddball task condition) for each of the eight task runs for each participant:

# calculate the mean accuracy per session and run for every participant:

dt_odd_behav_run_sub = dt_events %>%

# exclude participants performing below chance:

filter(!(subject %in% subjects_excluded)) %>%

# select only oddball condition and stimulus events:

filter(condition == "oddball" & trial_type == "stimulus") %>%

# filter for upside-down trials (oddballs) only:

filter(stim_orient == 180) %>%

setDT(.) %>%

# calculate mean accuracy per session and run:

.[, by = .(subject, session, run_study, run_session), .(

mean_accuracy = mean(accuracy))] %>%

# express accuracy in percent by multiplying with 100:

transform(mean_accuracy = mean_accuracy * 100) %>%

# check whether the mean accuracy is within the expected range of 0 to 100:

assert(within_bounds(lower.bound = 0, upper.bound = 100), mean_accuracy)We calculate the mean behavioral accuracy on slow trials (oddball task condition) for each of the eight task runs across participants:

# calculate mean accuracy per session and run across participants:

dt_odd_behav_run_mean = dt_odd_behav_run_sub %>%

setDT(.) %>%

# average across participants:

.[, by = .(session, run_study, run_session), .(

mean_accuracy = mean(mean_accuracy),

num_subs = .N,

sem_upper = mean(mean_accuracy) + (sd(mean_accuracy)/sqrt(.N)),

sem_lower = mean(mean_accuracy) - (sd(mean_accuracy)/sqrt(.N))

)] %>%

verify(num_subs == 36) %>%

# z-score the accuracy values:

mutate(mean_accuracy_z = scale(mean_accuracy, scale = TRUE, center = TRUE))We run a LME model to test the linear effect of task run on behavioral accuracy:

lme_odd_behav_run = lmerTest::lmer(

mean_accuracy ~ run_study + (1 + run_study | subject),

data = dt_odd_behav_run_sub, na.action = na.omit, control = lcctrl)

summary(lme_odd_behav_run)

anova(lme_odd_behav_run)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: mean_accuracy ~ run_study + (1 + run_study | subject)

## Data: dt_odd_behav_run_sub

## Control: lcctrl

##

## REML criterion at convergence: 1250.2

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -6.7158 0.1095 0.1285 0.1571 1.9026

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subject (Intercept) 0.12000 0.3464

## run_study 0.01038 0.1019 1.00

## Residual 3.97535 1.9938

## Number of obs: 288, groups: subject, 36

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 99.53650 0.26529 135.52431 375.201 <2e-16 ***

## run_study -0.01969 0.05401 92.71468 -0.365 0.716

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## run_study -0.757

## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## run_study 0.52843 0.52843 1 92.715 0.1329 0.7162We run a second model to test run- and session-specific effects:

dt <- dt_odd_behav_run_sub %>%

transform(run_session = as.factor(paste0("run-0", run_session)),

session = as.factor(paste0("ses-0", session)))lme_odd_behav_run = lmerTest::lmer(

mean_accuracy ~ session + run_session + (1 + session + run_session | subject),

data = dt, na.action = na.omit, control = lcctrl)

summary(lme_odd_behav_run)

emmeans(lme_odd_behav_run, list(pairwise ~ run_session | session))

anova(lme_odd_behav_run)

rm(dt)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: mean_accuracy ~ session + run_session + (1 + session + run_session |

## subject)

## Data: dt

## Control: lcctrl

##

## REML criterion at convergence: 1192.3

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -4.2095 -0.0252 0.1378 0.2041 2.8277

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subject (Intercept) 0.1958 0.4425

## sessionses-02 2.2398 1.4966 -0.73

## run_sessionrun-02 0.4736 0.6882 0.44 0.09

## run_sessionrun-03 0.8393 0.9161 0.70 -0.01 0.78

## run_sessionrun-04 4.2997 2.0736 0.90 -0.77 0.02 0.47

## Residual 2.4305 1.5590

## Number of obs: 288, groups: subject, 36

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 99.544925 0.218252 85.583815 456.101 <2e-16 ***

## sessionses-02 0.049649 0.309796 35.649869 0.160 0.874

## run_sessionrun-02 -0.054933 0.284024 67.397962 -0.193 0.847

## run_sessionrun-03 -0.009178 0.301376 54.333815 -0.030 0.976

## run_sessionrun-04 -0.423351 0.432376 37.096544 -0.979 0.334

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr) sss-02 rn_-02 rn_-03

## sessinss-02 -0.447

## rn_sssnr-02 -0.485 0.031

## rn_sssnr-03 -0.394 -0.006 0.555

## rn_sssnr-04 -0.115 -0.498 0.281 0.449

## $`emmeans of run_session | session`

## session = ses-01:

## run_session emmean SE df lower.CL upper.CL

## run-01 99.54 0.2183 35 99.10 99.99

## run-02 99.49 0.2611 35 98.96 100.02

## run-03 99.54 0.2943 35 98.94 100.13

## run-04 99.12 0.4614 35 98.18 100.06

##

## session = ses-02:

## run_session emmean SE df lower.CL upper.CL

## run-01 99.59 0.2883 35 99.01 100.18

## run-02 99.54 0.3302 35 98.87 100.21

## run-03 99.59 0.3478 35 98.88 100.29

## run-04 99.17 0.3389 35 98.48 99.86

##

## Degrees-of-freedom method: kenward-roger

## Confidence level used: 0.95

##

## $`pairwise differences of run_session | session`

## session = ses-01:

## contrast estimate SE df t.ratio p.value

## (run-01) - (run-02) 0.05493 0.284 35 0.193 0.9974

## (run-01) - (run-03) 0.00918 0.301 35 0.030 1.0000

## (run-01) - (run-04) 0.42335 0.432 35 0.979 0.7621

## (run-02) - (run-03) -0.04575 0.277 35 -0.165 0.9984

## (run-02) - (run-04) 0.36842 0.446 35 0.827 0.8415

## (run-03) - (run-04) 0.41417 0.401 35 1.033 0.7314

##

## session = ses-02:

## contrast estimate SE df t.ratio p.value

## (run-01) - (run-02) 0.05493 0.284 35 0.193 0.9974

## (run-01) - (run-03) 0.00918 0.301 35 0.030 1.0000

## (run-01) - (run-04) 0.42335 0.432 35 0.979 0.7621

## (run-02) - (run-03) -0.04575 0.277 35 -0.165 0.9984

## (run-02) - (run-04) 0.36842 0.446 35 0.827 0.8415

## (run-03) - (run-04) 0.41417 0.401 35 1.033 0.7314

##

## Degrees-of-freedom method: kenward-roger

## P value adjustment: tukey method for comparing a family of 4 estimates

##

## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## session 0.06243 0.06243 1 35.650 0.0257 0.8736

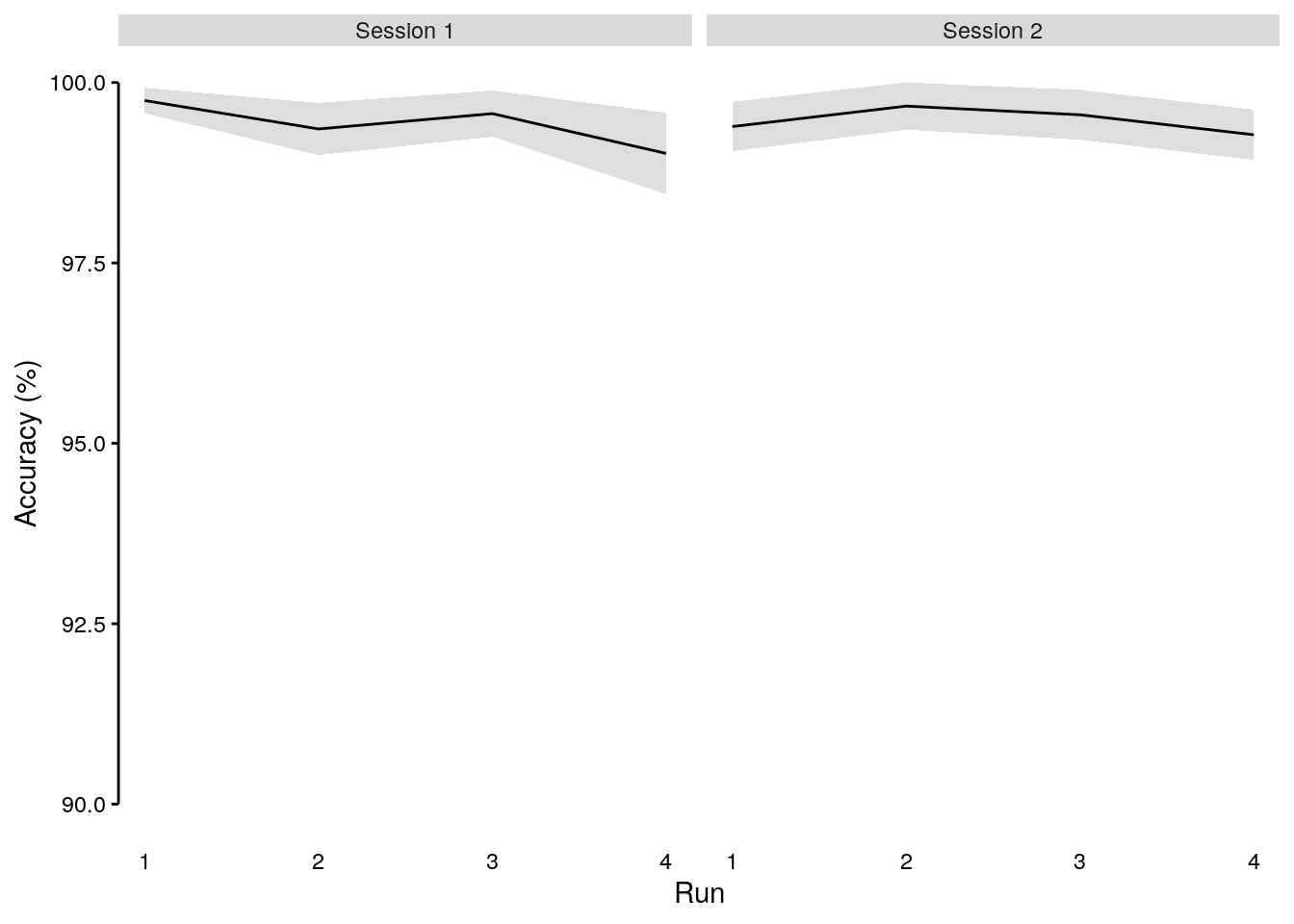

## run_session 2.90689 0.96896 3 50.201 0.3987 0.75452.2.4.3 Figure S1c

We plot the behavioral accuracy on slow trials (oddball task condition) across task runs (x-axis) for each study session (panels):

# change labels of the facet:

facet_labels_new = unique(paste0("Session ", dt_events$session))

facet_labels_old = as.character(unique(dt_events$session))

names(facet_labels_new) = facet_labels_old

# plot behavioral accuracy across runs:

plot_odd_run = ggplot(data = dt_odd_behav_run_mean, mapping = aes(

y = as.numeric(mean_accuracy), x = as.numeric(run_session))) +

geom_ribbon(aes(ymin = sem_lower, ymax = sem_upper), alpha = 0.5, fill = "gray") +

geom_line(color = "black") +

facet_wrap(~ as.factor(session), labeller = as_labeller(facet_labels_new)) +

ylab("Accuracy (%)") + xlab("Run") +

ylim(c(90, 100)) +

coord_capped_cart(left = "both", bottom = "both", expand = TRUE, ylim = c(90,100)) +

theme(axis.ticks.x = element_text(color = "white"),

axis.line.x = element_line(color = "white")) +

theme(strip.text.x = element_text(margin = margin(b = 2, t = 2))) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

axis.ticks.x = element_blank(),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

plot_odd_run

2.2.4.4 Source Data File Fig. S1c

dt_odd_behav_run_mean %>%

select(-num_subs, -mean_accuracy_z) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_s1c.csv"),

row.names = FALSE)2.2.4.5 Misses vs. false alarms

We calculate the mean frequency of misses (missed response to upside-down images) and false alarms (incorrect response to upright images):

dt_odd_behav_sdt_sub = dt_events %>%

# exclude participants performing below chance:

filter(!(subject %in% subjects_excluded)) %>%

# select only oddball condition and stimulus events:

filter(condition == "oddball" & trial_type == "stimulus") %>%

setDT(.) %>%

# create new variable with number of upside-down / upright stimuli per run:

.[, by = .(subject, session, run_session, stim_orient), ":=" (

num_orient = .N

)] %>%

# get the number of signal detection trial types for each run:

.[, by = .(subject, session, run_session, sdt_type), .(

num_trials = .N,

freq = .N/unique(num_orient)

)] %>%

# add missing values:

complete(nesting(subject, session, run_session), nesting(sdt_type),

fill = list(num_trials = 0, freq = 0)) %>%

transform(freq = freq * 100) %>%

filter(sdt_type %in% c("false alarm", "miss"))We run a LME model to test the effect of signal detection type (miss vs. false alarm), task run and session on the frequency of those events:

lme_odd_behav_sdt = lmer(

freq ~ sdt_type + run_session * session + (1 + run_session + session | subject),

data = subset(dt_odd_behav_sdt_sub), na.action = na.omit, control = lcctrl)

summary(lme_odd_behav_sdt)

anova(lme_odd_behav_sdt)

emmeans_results = emmeans(lme_odd_behav_sdt, list(pairwise ~ sdt_type))

emmeans_pvalues = round_pvalues(summary(emmeans_results[[2]])$p.value)

emmeans_results## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: freq ~ sdt_type + run_session * session + (1 + run_session +

## session | subject)

## Data: subset(dt_odd_behav_sdt_sub)

## Control: lcctrl

##

## REML criterion at convergence: 2164.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -2.3848 -0.2865 -0.1767 -0.0525 7.7856

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subject (Intercept) 0.12419 0.3524

## run_session 0.09805 0.3131 0.37

## session 0.32394 0.5692 -0.94 -0.66

## Residual 2.21395 1.4879

## Number of obs: 576, groups: subject, 36

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 0.10130 0.48776 457.56239 0.208 0.8356

## sdt_typemiss 0.25175 0.12399 501.00009 2.030 0.0429 *

## run_session 0.06991 0.18296 442.67822 0.382 0.7026

## session 0.05953 0.31819 366.45275 0.187 0.8517

## run_session:session -0.01733 0.11090 501.00010 -0.156 0.8759

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr) sdt_ty rn_sss sessin

## sdt_typemss -0.127

## run_session -0.849 0.000

## session -0.925 0.000 0.736

## rn_sssn:sss 0.853 0.000 -0.909 -0.871

## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## sdt_type 9.1265 9.1265 1 501.00 4.1223 0.04285 *

## run_session 0.3232 0.3232 1 442.68 0.1460 0.70258

## session 0.0775 0.0775 1 366.45 0.0350 0.85169

## run_session:session 0.0541 0.0541 1 501.00 0.0244 0.87587

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## $`emmeans of sdt_type`

## sdt_type emmean SE df lower.CL upper.CL

## false alarm 0.300 0.118 66.4 0.0656 0.535

## miss 0.552 0.118 66.4 0.3174 0.787

##

## Results are averaged over the levels of: session

## Degrees-of-freedom method: kenward-roger

## Confidence level used: 0.95

##

## $`pairwise differences of sdt_type`

## contrast estimate SE df t.ratio p.value

## false alarm - miss -0.252 0.124 466 -2.030 0.0429

##

## Results are averaged over the levels of: session

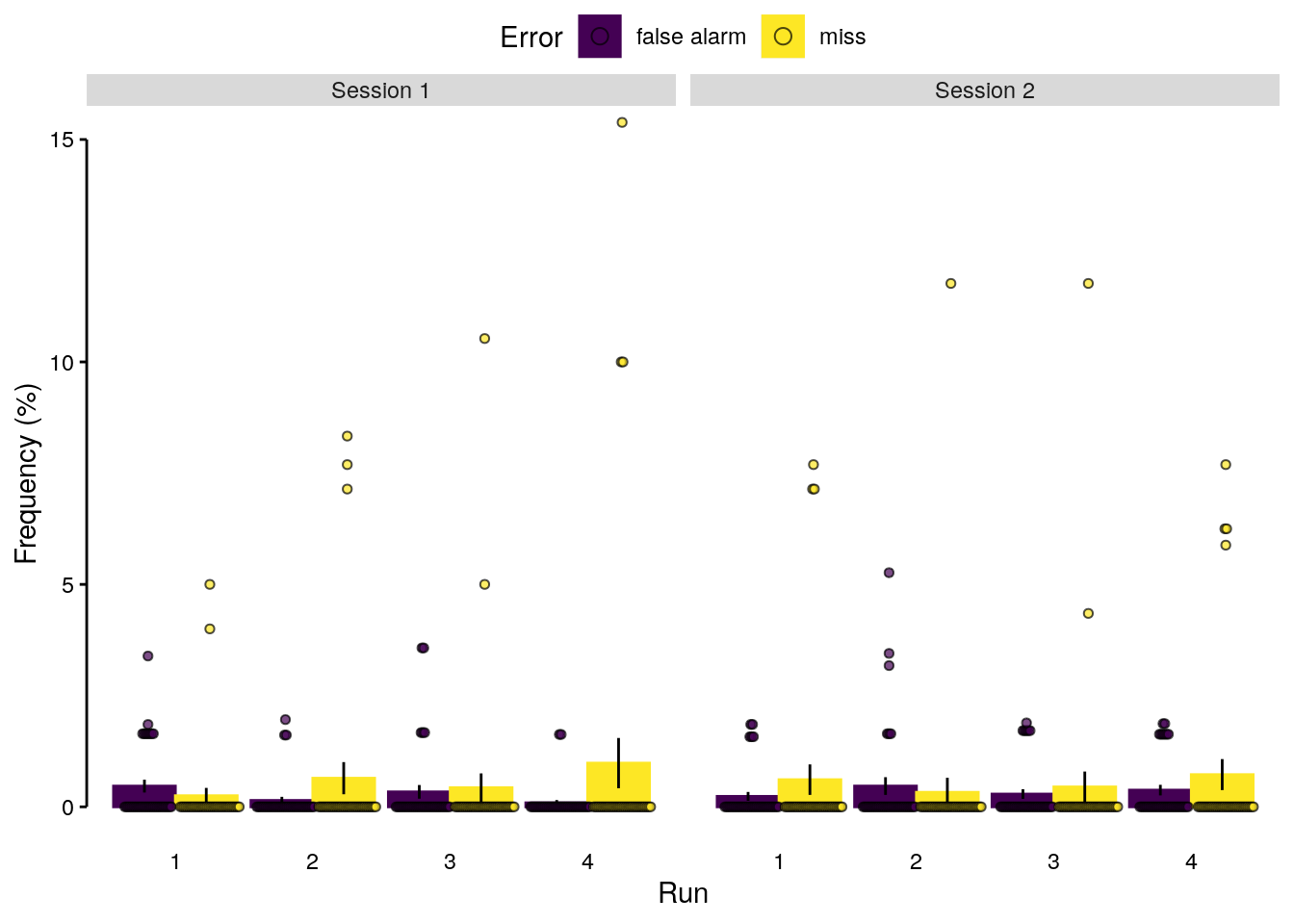

## Degrees-of-freedom method: kenward-roger2.2.4.6 Figure S1b

We plot the frequency of misses and false alarms as a function of task run and study session:

plot_odd_sdt = ggplot(data = dt_odd_behav_sdt_sub, mapping = aes(

y = as.numeric(freq), x = as.numeric(run_session),

fill = as.factor(sdt_type), color = as.factor(sdt_type))) +

stat_summary(geom = "bar", fun = mean, position = position_dodge(),

na.rm = TRUE) +

stat_summary(geom = "errorbar", fun.data = mean_se,

position = position_dodge(0.9), width = 0, color = "black") +

facet_wrap(~ as.factor(session), labeller = as_labeller(facet_labels_new)) +

geom_dotplot(

aes(group = interaction(run_session, session, sdt_type)),

binaxis = "y", stackdir = "center", stackratio = 0.2, alpha = 0.7,

inherit.aes = TRUE, binwidth = 0.2, position = position_dodge(),

color = "black") +

ylab("Frequency (%)") + xlab("Run") +

coord_capped_cart(left = "both", bottom = "both",

expand = TRUE, ylim = c(0, 15)) +

scale_fill_viridis(name = "Error", discrete = TRUE) +

scale_color_viridis(name = "Error", discrete = TRUE) +

theme(strip.text.x = element_text(margin = margin(b = 2, t = 2))) +

theme(legend.position = "top", legend.direction = "horizontal",

legend.justification = "center", legend.margin = margin(0, 0, 0, 0),

legend.box.margin = margin(t = 0, r = 0, b = -5, l = 0)) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks.x = element_line(color = "white")) +

theme(axis.line.x = element_line(color = "white")) +

theme(axis.ticks.y = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

plot_odd_sdt

2.2.4.7 Source Data File Fig. S1b

dt_odd_behav_sdt_sub %>%

select(-num_trials) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_s1b.csv"),

row.names = FALSE)2.2.5 Sequence trials

2.2.5.1 Effect of sequence speed

We calculate the mean behavioral accuracy on sequence trials for each of the five sequence speeds (inter-stimulus intervals):

dt_seq_behav = dt_events %>%

# filter behavioral events data for sequence trials only:

filter(condition == "sequence") %>%

setDT(.) %>%

# create additional variables to describe each trial:

.[, by = .(subject, trial), ":=" (

trial_key_down = ifelse(any(key_down == 1, na.rm = TRUE), 1, 0),

trial_accuracy = ifelse(any(accuracy == 1, na.rm = TRUE), 1, 0),

trial_target_position = serial_position[which(target == 1)],

trial_speed = unique(interval_time[which(!is.na(interval_time))])

)] %>%

# filter for choice trials only:

filter(trial_type == "choice") %>%

setDT(.) %>%

# group speed conditions into fast and slow conditions:

mutate(speed = ifelse(trial_speed %in% c(2.048, 0.512), "slow", "fast")) %>%

# define variable factors of interest as numeric:

transform(trial_speed = as.numeric(trial_speed)) %>%

transform(trial_target_position = as.numeric(trial_target_position)) %>%

setDT(.)dt_seq_behav_speed = dt_seq_behav %>%

# filter out excluded subjects:

filter(!(subject %in% subjects_excluded)) %>%

setDT(.) %>%

# average accuracy for each participant:

.[, by = .(subject, trial_speed), .(

num_trials = .N,

mean_accuracy = mean(accuracy)

)] %>%

transform(mean_accuracy = mean_accuracy * 100) %>%

setDT(.) %>%

verify(all(num_trials == 15)) %>%

verify(.[, by = .(trial_speed), .(

num_subjects = .N

)]$num_subjects == 36) %>%

setorder(subject, trial_speed) %>%

mutate(trial_speed = as.numeric(trial_speed)) %>%

setDT(.)We run a LME model to test the effect of sequence speed (inter-stimulus interval) on mean behavioral accuracy on sequence trials:

lme_seq_behav = lmer(

mean_accuracy ~ trial_speed + (1 + trial_speed | subject),

data = dt_seq_behav_speed, na.action = na.omit, control = lcctrl)

summary(lme_seq_behav)

anova(lme_seq_behav)

emmeans_results = emmeans(lme_seq_behav, list(pairwise ~ trial_speed))

emmeans_pvalues = round_pvalues(summary(emmeans_results[[2]])$p.value)

emmeans_results

emmeans_pvalues## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: mean_accuracy ~ trial_speed + (1 + trial_speed | subject)

## Data: dt_seq_behav_speed

## Control: lcctrl

##

## REML criterion at convergence: 1245.4

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -2.8011 -0.4849 0.2106 0.6766 2.3025

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subject (Intercept) 32.535 5.704

## trial_speed 3.969 1.992 -0.58

## Residual 44.342 6.659

## Number of obs: 180, groups: subject, 36

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 91.0872 1.1316 35.0000 80.495 <2e-16 ***

## trial_speed 1.5063 0.7287 35.0000 2.067 0.0462 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## trial_speed -0.508

## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## trial_speed 189.49 189.49 1 35 4.2733 0.04617 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## $`emmeans of trial_speed`

## trial_speed emmean SE df lower.CL upper.CL

## 0.557 91.9 0.989 35 89.9 93.9

##

## Degrees-of-freedom method: kenward-roger

## Confidence level used: 0.95

##

## $` of trial_speed`

## contrast estimate SE df z.ratio p.value

## (nothing) nonEst NA NA NA NA

##

## Degrees-of-freedom method: kenward-roger

##

## [1] "p = NA"We compare mean behavioral accuracy at each sequence speed level to the chancel level of 50%:

chance_level = 50

dt_seq_behav_mean = dt_seq_behav_speed %>%

# average across participants:

.[, by = .(trial_speed), {

ttest_results = t.test(

mean_accuracy, mu = chance_level, alternative = "greater")

list(

mean_accuracy = round(mean(mean_accuracy), digits = 2),

sd_accuracy = round(sd(mean_accuracy), digits = 2),

tvalue = round(ttest_results$statistic, digits = 2),

conf_lb = round(ttest_results$conf.int[1], digits = 2),

conf_ub = round(ttest_results$conf.int[2], digits = 2),

pvalue = ttest_results$p.value,

cohens_d = round((mean(mean_accuracy) - chance_level)/sd(mean_accuracy), 2),

df = ttest_results$parameter,

num_subs = .N,

sem_upper = mean(mean_accuracy) + (sd(mean_accuracy)/sqrt(.N)),

sem_lower = mean(mean_accuracy) - (sd(mean_accuracy)/sqrt(.N))

)}] %>%

verify(num_subs == 36) %>%

mutate(sem_range = sem_upper - sem_lower) %>%

mutate(pvalue_adjust = p.adjust(pvalue, method = "fdr")) %>%

mutate(pvalue_adjust_round = round_pvalues(pvalue_adjust))

# print paged table:

rmarkdown::paged_table(dt_seq_behav_mean)We calculate the reduction in mean behavioral accuracy comparing the fastest and slowest speed condition:

a = dt_seq_behav_mean$mean_accuracy[dt_seq_behav_mean$trial_speed == 2.048]

b = dt_seq_behav_mean$mean_accuracy[dt_seq_behav_mean$trial_speed == 0.032]

reduced_acc = round((1 - (b/a)) * 100, 2)

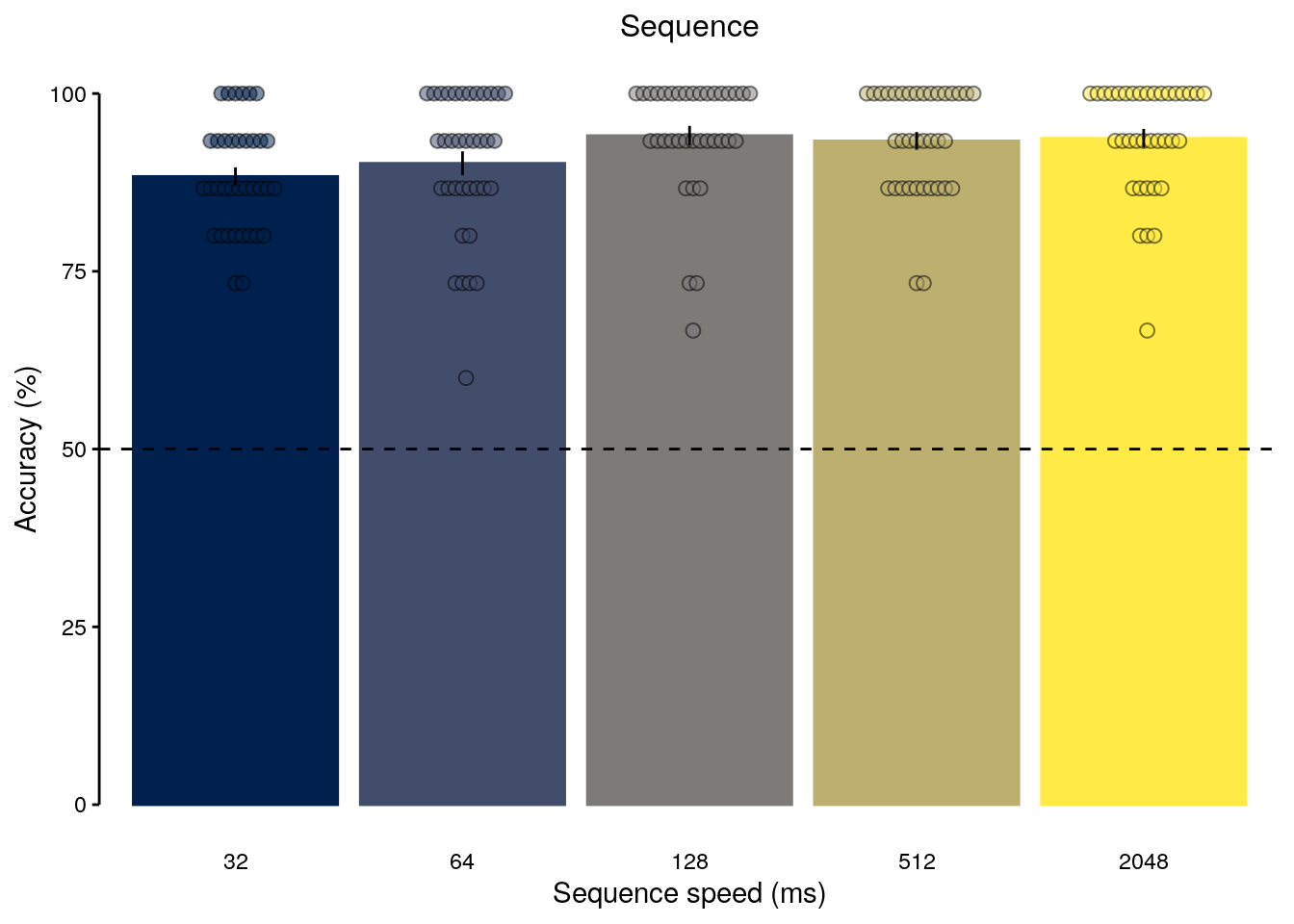

sprintf("reduction in accuracy: %.2f", reduced_acc)## [1] "reduction in accuracy: 5.73"2.2.5.2 Figure 1e

fig_seq_speed = ggplot(data = dt_seq_behav_speed, mapping = aes(

y = as.numeric(mean_accuracy), x = as.factor(as.numeric(trial_speed)*1000),

fill = as.factor(trial_speed), color = as.factor(trial_speed))) +

geom_bar(stat = "summary", fun = "mean") +

geom_dotplot(binaxis = "y", stackdir = "center", stackratio = 0.5,

color = "black", alpha = 0.5,

inherit.aes = TRUE, binwidth = 2) +

geom_errorbar(stat = "summary", fun.data = "mean_se", width = 0.0, color = "black") +

geom_hline(aes(yintercept = 50), linetype = "dashed", color = "black") +

ylab("Accuracy (%)") + xlab("Sequence speed (ms)") +

scale_fill_viridis(discrete = TRUE, guide = FALSE, option = "cividis") +

scale_color_viridis(discrete = TRUE, guide = FALSE, option = "cividis") +

coord_capped_cart(left = "both", bottom = "both", expand = TRUE, ylim = c(0, 100)) +

theme(plot.title = element_text(size = 12, face = "plain")) +

theme(axis.ticks.x = element_blank(), axis.line.x = element_blank()) +

ggtitle("Sequence") +

theme(plot.title = element_text(hjust = 0.5)) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks.x = element_line(color = "white")) +

theme(axis.line.x = element_line(color = "white")) +

theme(axis.ticks.y = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

fig_seq_speed

2.2.5.3 Source Data File Fig. 1e

dt_seq_behav_speed %>%

select(-num_trials) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_1e.csv"),

row.names = FALSE)2.2.5.4 Effect of target position

We calculate the mean behavioral accuracy on sequence trials for each of possible serial position of the target stimulus:

dt_seq_behav_position = dt_seq_behav %>%

# filter out excluded subjects:

filter(!(subject %in% subjects_excluded)) %>% setDT(.) %>%

# average accuracy for each participant:

.[, by = .(subject, trial_target_position), .(

num_trials = .N,

mean_accuracy = mean(accuracy)

)] %>%

verify(.[, by = .(trial_target_position), .(

num_subs = .N

)]$num_subs == 36) %>%

transform(mean_accuracy = mean_accuracy * 100) %>%

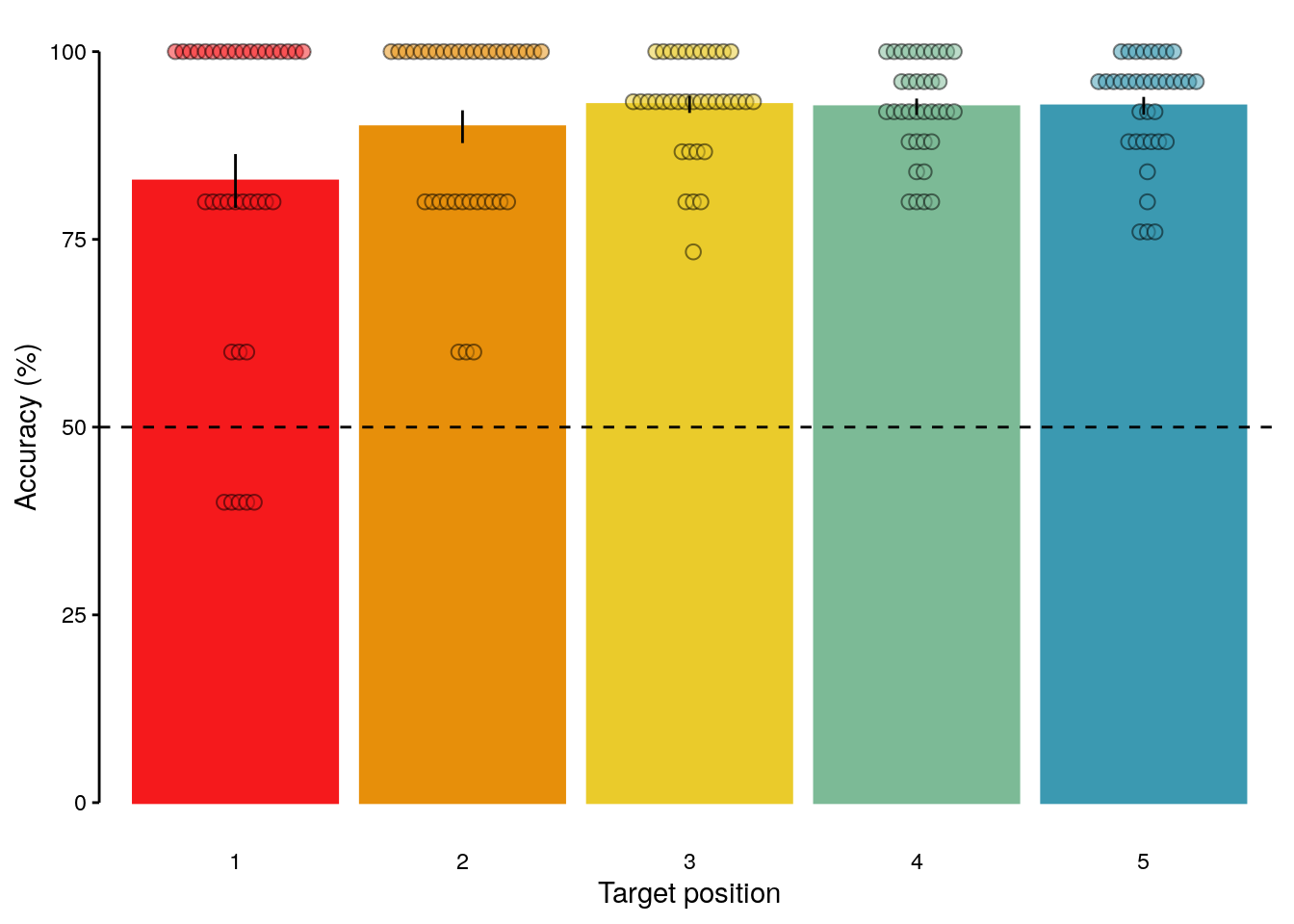

setorder(subject, trial_target_position)lme_seq_behav_position = lmer(

mean_accuracy ~ trial_target_position + (1 + trial_target_position | subject),

data = dt_seq_behav_position, na.action = na.omit, control = lcctrl)

summary(lme_seq_behav_position)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: mean_accuracy ~ trial_target_position + (1 + trial_target_position |

## subject)

## Data: dt_seq_behav_position

## Control: lcctrl

##

## REML criterion at convergence: 1385.9

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.2760 -0.4809 0.1467 0.5073 2.1940

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subject (Intercept) 221.205 14.873

## trial_target_position 8.402 2.899 -1.00

## Residual 102.478 10.123

## Number of obs: 180, groups: subject, 36

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 83.4370 3.0456 35.9399 27.396 < 2e-16 ***

## trial_target_position 2.2667 0.7197 42.0225 3.149 0.00301 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## trl_trgt_ps -0.936anova(lme_seq_behav_position)## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## trial_target_position 1016.4 1016.4 1 42.022 9.9178 0.003011 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 12.2.5.5 Figure S1d

fig_seq_position = ggplot(data = dt_seq_behav_position, mapping = aes(

y = as.numeric(mean_accuracy), x = as.factor(trial_target_position),

fill = as.factor(trial_target_position), color = as.factor(trial_target_position))) +

geom_bar(stat = "summary", fun = "mean") +

geom_dotplot(binaxis = "y", stackdir = "center", stackratio = 0.5,

color = "black", alpha = 0.5,

inherit.aes = TRUE, binwidth = 2) +

geom_errorbar(stat = "summary", fun.data = "mean_se", width = 0.0, color = "black") +

geom_hline(aes(yintercept = 50), linetype = "dashed", color = "black") +

ylab("Accuracy (%)") + xlab("Target position") +

scale_fill_manual(values = color_events, guide = FALSE) +

scale_color_manual(values = color_events, guide = FALSE) +

coord_capped_cart(left = "both", bottom = "both", expand = TRUE, ylim = c(0, 100)) +

theme(axis.ticks.x = element_blank(), axis.line.x = element_blank()) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks.x = element_line(color = "white")) +

theme(axis.line.x = element_line(color = "white")) +

theme(axis.ticks.y = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

fig_seq_position

2.2.5.6 Source Data File Fig. S1d

dt_seq_behav_position %>%

select(-num_trials) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_s1d.csv"),

row.names = FALSE)2.2.6 Repetition trials

2.2.6.1 Mean accuracy

We calculate mean behavioral accuracy in repetition trials for each participant:

dt_rep_behav = dt_events %>%

# filter for repetition trials only:

filter(condition == "repetition") %>% setDT(.) %>%

# create additional variables to describe each trial:

.[, by = .(subject, trial), ":=" (

trial_key_down = ifelse(any(key_down == 1, na.rm = TRUE), 1, 0),

trial_accuracy = ifelse(any(accuracy == 1, na.rm = TRUE), 1, 0),

trial_target_position = serial_position[which(target == 1)],

trial_speed = unique(interval_time[which(!is.na(interval_time))])

)] %>%

# select only choice trials that contain the accuracy data:

filter(trial_type == "choice") %>% setDT(.) %>%

verify(all(trial_accuracy == accuracy)) %>%

# average across trials separately for each participant:

.[, by = .(subject, trial_target_position), .(

num_trials = .N,

mean_accuracy = mean(accuracy)

)] %>%

verify(all(num_trials == 5)) %>%

# transform mean accuracy into percent (%)

transform(mean_accuracy = mean_accuracy * 100) %>%

# check if accuracy values range between 0 and 100

verify(between(x = mean_accuracy, lower = 0, upper = 100)) %>%

mutate(interference = ifelse(

trial_target_position == 2, "fwd", trial_target_position)) %>%

transform(interference = ifelse(

trial_target_position == 9, "bwd", interference))We run separate one-sided one-sample t-tests to access if mean behavioral performance for every repetition condition differs from a 50% chance level:

chance_level = 50

dt_rep_behav_chance = dt_rep_behav %>%

# filter out excluded subjects:

filter(!(subject %in% subjects_excluded)) %>%

setDT(.) %>%

# average across participants:

.[, by = .(trial_target_position), {

ttest_results = t.test(

mean_accuracy, mu = chance_level, alternative = "greater")

list(

mean_accuracy = round(mean(mean_accuracy), digits = 2),

sd_accuracy = round(sd(mean_accuracy), digits = 2),

tvalue = round(ttest_results$statistic, digits = 2),

pvalue = ttest_results$p.value,

conf_lb = round(ttest_results$conf.int[1], digits = 2),

conf_ub = round(ttest_results$conf.int[2], digits = 2),

cohens_d = round((mean(mean_accuracy) - chance_level)/sd(mean_accuracy), 2),

df = ttest_results$parameter,

num_subs = .N,

sem_upper = mean(mean_accuracy) + (sd(mean_accuracy)/sqrt(.N)),

sem_lower = mean(mean_accuracy) - (sd(mean_accuracy)/sqrt(.N))

)}] %>% verify(num_subs == 36) %>%

mutate(sem_range = sem_upper - sem_lower) %>%

setDT(.) %>%

filter(trial_target_position %in% seq(2,9)) %>%

# create additional variable to label forward and backward interference:

mutate(interference = ifelse(

trial_target_position == 2, "fwd", trial_target_position)) %>%

transform(interference = ifelse(

trial_target_position == 9, "bwd", interference)) %>%

mutate(pvalue_adjust = p.adjust(pvalue, method = "fdr")) %>%

mutate(pvalue_adjust_round = round_pvalues(pvalue_adjust)) %>%

mutate(significance = ifelse(pvalue_adjust < 0.05, "*", "")) %>%

mutate(cohens_d = paste0("d = ", cohens_d, significance)) %>%

mutate(label = paste0(

trial_target_position - 1, "/", 10 - trial_target_position)) %>%

setDT(.) %>%

setorder(trial_target_position)

# print table:

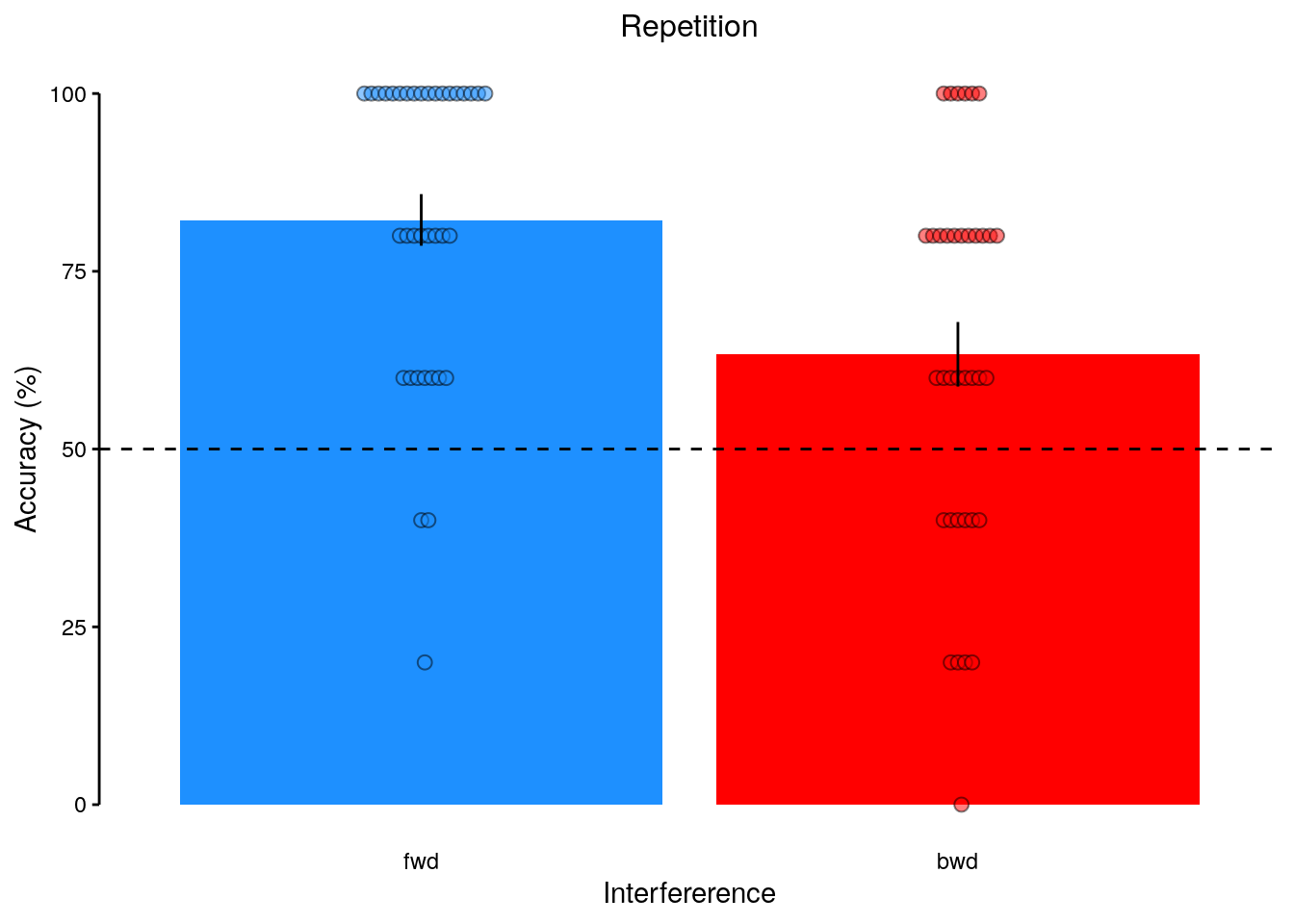

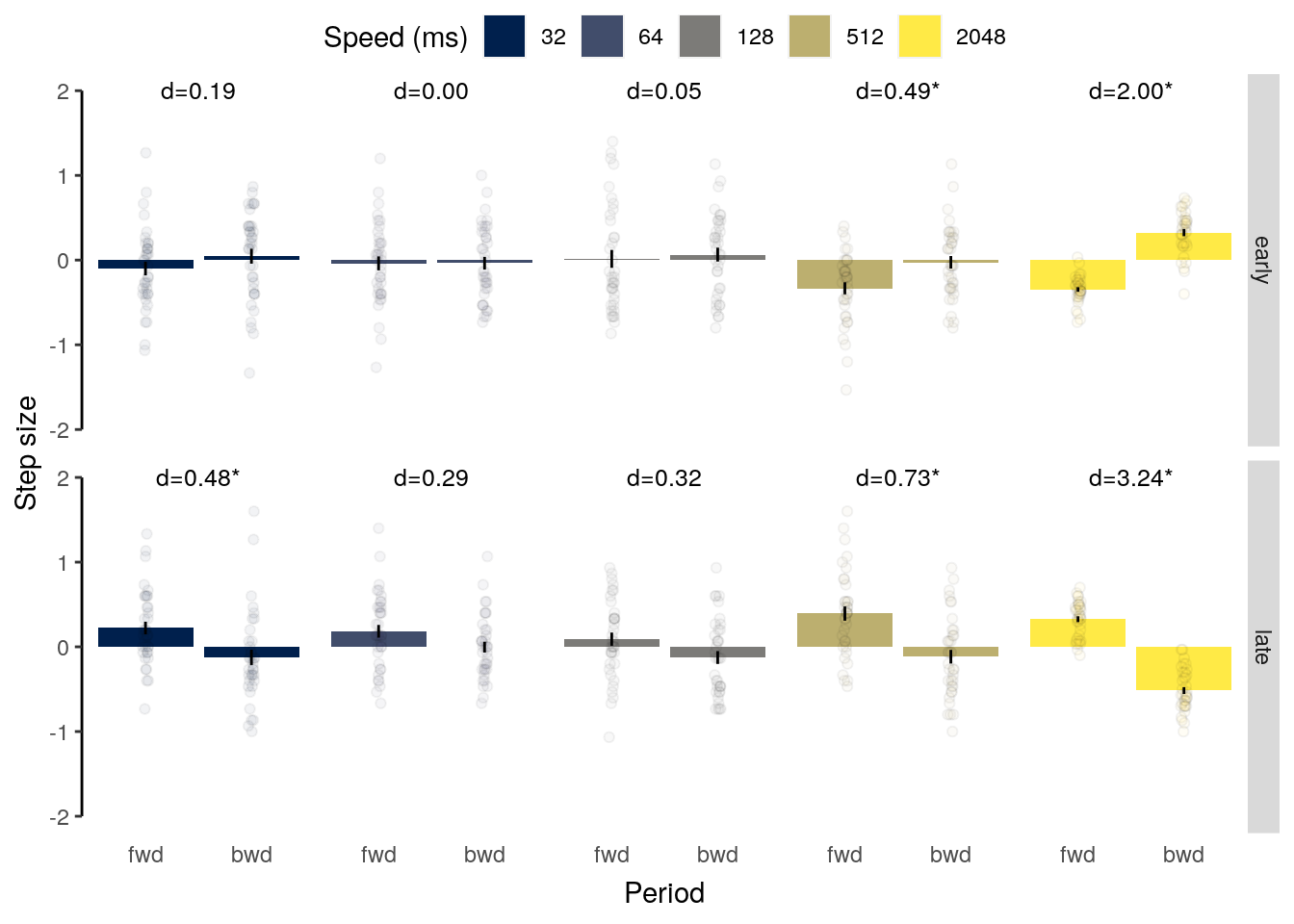

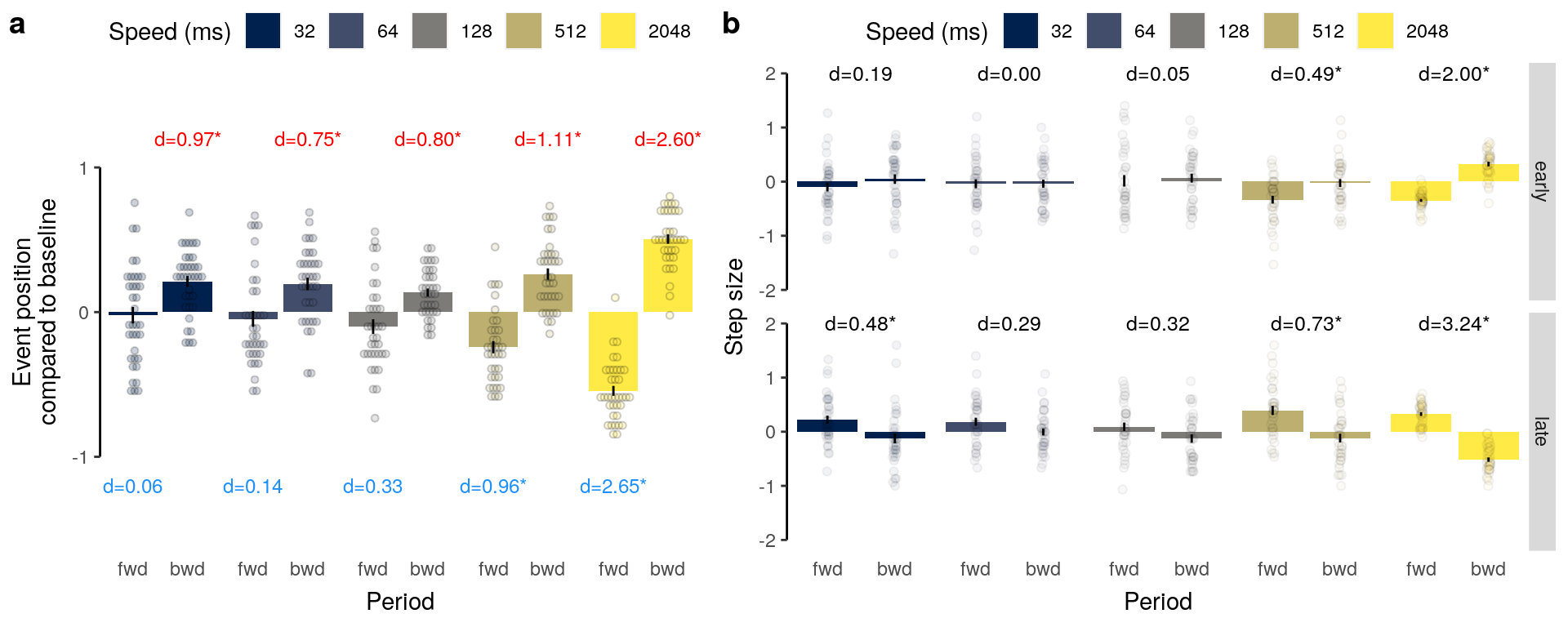

rmarkdown::paged_table(dt_rep_behav_chance)2.2.6.2 Figure 1f

We plot the results of the forward and backward interference conditions:

plot_data = dt_rep_behav_chance %>% filter(trial_target_position %in% c(2,9))

fig_behav_rep = ggplot(data = plot_data, mapping = aes(

y = as.numeric(mean_accuracy), x = fct_rev(as.factor(interference)),

fill = as.factor(interference))) +

geom_bar(stat = "summary", fun = "mean") +

geom_dotplot(data = dt_rep_behav %>%

filter(!(subject %in% subjects_excluded)) %>%

filter(trial_target_position %in% c(2,9)) %>% setDT(.) %>%

.[, by = .(subject, interference), .(

mean_accuracy = mean(mean_accuracy)

)],

binaxis = "y", stackdir = "center", stackratio = 0.5,

color = "black", alpha = 0.5, inherit.aes = TRUE, binwidth = 2) +

geom_errorbar(aes(ymin = sem_lower, ymax = sem_upper), width = 0.0, color = "black") +

ylab("Accuracy (%)") + xlab("Interfererence") +

ggtitle("Repetition") +

theme(plot.title = element_text(hjust = 0.5)) +

scale_fill_manual(values = c("red", "dodgerblue"), guide = FALSE) +

coord_capped_cart(left = "both", bottom = "both", expand = TRUE, ylim = c(0,100)) +

theme(axis.ticks.x = element_blank(), axis.line.x = element_blank()) +

theme(plot.title = element_text(size = 12, face = "plain")) +

#scale_y_continuous(labels = label_fill(seq(0, 100, 12.5), mod = 2), breaks = seq(0, 100, 12.5)) +

geom_hline(aes(yintercept = 50), linetype = "dashed", color = "black") +

#geom_text(aes(y = as.numeric(mean_accuracy) + 10, label = pvalue_adjust_round), size = 3) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks.x = element_line(color = "white")) +

theme(axis.line.x = element_line(color = "white")) +

theme(axis.ticks.y = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

fig_behav_rep

2.2.6.3 Source Data File Fig. 1f

dt_rep_behav %>%

select(-num_trials) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_1f.csv"),

row.names = FALSE)2.2.6.4 Figure S1e

We plot the results of all intermediate repetition conditions:

plot_data = dt_rep_behav_chance %>% filter(trial_target_position %in% seq(2,9))

plot_behav_rep_all = ggplot(data = plot_data, mapping = aes(

y = as.numeric(mean_accuracy), x = as.numeric(trial_target_position),

fill = as.numeric(trial_target_position))) +

geom_bar(stat = "identity") +

geom_dotplot(data = dt_rep_behav %>%

filter(!(subject %in% subjects_excluded)) %>%

filter(trial_target_position %in% seq(2,9)) %>% setDT(.) %>%

.[, by = .(subject, trial_target_position), .(

mean_accuracy = mean(mean_accuracy)

)],

aes(x = as.numeric(trial_target_position),

fill = as.numeric(trial_target_position),

group = as.factor(trial_target_position)),

binaxis = "y", stackdir = "center", stackratio = 0.5,

inherit.aes = TRUE, binwidth = 2, color = "black", alpha = 0.5) +

geom_errorbar(aes(ymin = sem_lower, ymax = sem_upper), width = 0.0, color = "black") +

geom_text(aes(y = as.numeric(mean_accuracy) + 7, label = cohens_d), size = 2.5) +

ylab("Accuracy (%)") + xlab("First / second item repetitions") +

scale_fill_gradient(low = "dodgerblue", high = "red", guide = FALSE) +

coord_capped_cart(left = "both", bottom = "both", expand = TRUE, ylim = c(0,100)) +

scale_x_continuous(labels = plot_data$label, breaks = seq(2, 9, 1)) +

geom_hline(aes(yintercept = 50), linetype = "dashed", color = "black") +

theme(axis.ticks.x = element_blank(), axis.line.x = element_blank()) +

theme(axis.text = element_text(color = "black")) +

theme(axis.ticks.x = element_line(color = "white")) +

theme(axis.line.x = element_line(color = "white")) +

theme(axis.ticks.y = element_line(color = "black")) +

theme(axis.line.y = element_line(colour = "black"),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

plot_behav_rep_all

2.2.6.5 Source Data File Fig. S1e

dt_rep_behav %>%

select(-num_trials) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_s1e.csv"),

row.names = FALSE)ggsave(filename = "highspeed_plot_behavior_repetition_supplement.pdf",

plot = last_plot(), device = cairo_pdf, path = path_figures,

scale = 1, dpi = "retina", width = 5, height = 3, units = "in")We run a LME model to test the effect of repetition condition on mean behavioral accuracy in repetition trials:

lme_rep_behav_condition = lmer(

mean_accuracy ~ trial_target_position + (1 + trial_target_position|subject),

data = dt_rep_behav, na.action = na.omit, control = lcctrl)

summary(lme_rep_behav_condition)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: mean_accuracy ~ trial_target_position + (1 + trial_target_position |

## subject)

## Data: dt_rep_behav

## Control: lcctrl

##

## REML criterion at convergence: 3287.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.3290 -0.6353 0.1102 0.7484 1.9475

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subject (Intercept) 59.90 7.739

## trial_target_position 1.35 1.162 -0.02

## Residual 468.95 21.655

## Number of obs: 360, groups: subject, 40

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 87.7619 2.5538 39.0000 34.365 < 2e-16 ***

## trial_target_position -2.5976 0.3428 39.0000 -7.578 3.49e-09 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## trl_trgt_ps -0.643anova(lme_rep_behav_condition)## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## trial_target_position 26930 26930 1 39 57.426 3.493e-09 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 12.2.7 Figure Main

We plot the figure for the main text:

2.2.8 Figure SI

We plot the figure for the supplementary information:

ggsave(filename = "wittkuhn_schuck_figure_s1.pdf",

plot = last_plot(), device = cairo_pdf, path = path_figures, scale = 1,

dpi = "retina", width = 8, height = 5)2.3 Participants

We analyze characteristics of the participants:

# read data table with participant information:

dt_participants <- do.call(rbind, lapply(Sys.glob(path_participants), fread))

# remove selected participants from the data table:

dt_participants = dt_participants %>%

filter(!(participant_id %in% subjects_excluded)) %>%

setDT(.)

base::table(dt_participants$sex)

round(sd(dt_participants$age), digits = 2)

base::summary(

dt_participants[, c("age", "digit_span", "session_interval"), with = FALSE])

round(sd(dt_participants$session_interval), digits = 2)##

## f m

## 20 16

## [1] 3.77

## age digit_span session_interval

## Min. :20.00 Min. : 9.00 Min. : 1.000

## 1st Qu.:22.00 1st Qu.:15.00 1st Qu.: 4.000

## Median :24.00 Median :16.00 Median : 7.500

## Mean :24.61 Mean :16.75 Mean : 9.167

## 3rd Qu.:26.00 3rd Qu.:19.00 3rd Qu.:13.250

## Max. :35.00 Max. :24.00 Max. :24.000

## [1] 6.142.4 Decoding on slow trials

2.4.1 Initialization

We load the data and relevant functions:

# find the path to the root of this project:

if (!requireNamespace("here")) install.packages("here")

if ( basename(here::here()) == "highspeed" ) {

path_root = here::here("highspeed-analysis")

} else {

path_root = here::here()

}

# create a list of participants to exclude based on behavioral performance:

sub_exclude <- c("sub-24", "sub-31", "sub-37", "sub-40")The next step is only evaluated during manual execution:

# source all relevant functions from the setup R script:

source(file.path(path_root, "code", "highspeed-analysis-setup.R"))2.4.2 Decoding accuracy

2.4.2.1 Mean decoding accuracy

We calculate the mean decoding accuracy:

calc_class_stim_acc <- function(data, mask_label) {

# calculate the mean decoding accuracy for each participant:

dt_odd_peak = data %>%

# select data for the oddball peak decoding

# leave out the redundant "other" class predictions:

filter(test_set == "test-odd_peak" & class != "other" & mask == mask_label) %>%

# filter out excluded participants:

filter(!(id %in% sub_exclude)) %>%

# only check classifier of stimulus presented on a given trial:

filter(class == stim) %>%

# calculate the decoding accuracy by comparing true and predicted label:

mutate(acc = 0) %>%

transform(acc = ifelse(pred_label == stim, 1, acc)) %>%

setDT(.) %>%

# calculate average decoding accuracy for every participant and classification:

.[, by = .(classification, id), .(

mean_accuracy = mean(acc) * 100,

num_trials = .N

)] %>%

verify(all(num_trials <= 480)) %>%

# verify if the number of participants matches:

verify(.[classification == "ovr", .(num_subs = .N)]$num_subs == 36)

return(dt_odd_peak)

}calc_max_prob_acc <- function(data, mask_label) {

dt_odd_peak <- data %>%

# select data for the oddball peak decoding

# leave out the redundant "other" class predictions:

filter(test_set == "test-odd_peak" & class != "other" & mask == mask_label) %>%

# filter out excluded participants:

filter(!(id %in% sub_exclude)) %>%

# calculate the decoding accuracy by comparing true and predicted label:

setDT(.) %>%

# calculate average decoding accuracy for every participant and classification:

.[, by = .(classification, id, trial, stim), .(

max_prob_label = class[which.max(probability)],

num_classes = .N

)] %>%

verify(num_classes == 5) %>%

.[, "acc" := ifelse(stim == max_prob_label, 1, 0)] %>%

.[, by = .(classification, id), .(

mean_accuracy = mean(acc) * 100,

num_trials = .N

)] %>%

verify(num_trials <= 480)

return(dt_odd_peak)

}We print the results for the decoding accuracy:

decoding_accuracy <- function(accuracy, alt_label){

# print average decoding accuracy:

print(sprintf("decoding accuracy: m = %0.2f %%", mean(accuracy)))

# print sd decoding accuracy:

print(sprintf("decoding accuracy: sd = %0.2f %%", sd(accuracy)))

# print range of decoding accuracy:

print(sprintf("decoding accuracy: range = %s %%",

paste(round(range(accuracy),0), collapse = "-")))

# define decoding accuracy baseline (should equal 20%):

acc_baseline = 100/5

print(sprintf("chance baseline = %d %%", acc_baseline))

# perform effect size (cohen`s d)

cohens_d = (mean(accuracy) - acc_baseline) / sd(accuracy)

print(sprintf("effect size (cohen's d) = %0.2f", cohens_d))

# perform shapiro-wilk test to test for normality of the data

print(shapiro.test(accuracy))

# perform one-sided one-sample t-test against baseline:

results = t.test(accuracy, mu = acc_baseline, alternative = alt_label)

print(results)

# perform one-sample wilcoxon test in case of non-normality of the data:

print(wilcox.test(accuracy, mu = acc_baseline, alternative = alt_label))

}#dt_odd_peak <- calc_class_stim_acc(data = dt_pred, mask_label = "cv")

#dt_odd_peak_hpc <- calc_class_stim_acc(data = dt_pred, mask_label = "cv_hpc")

dt_odd_peak <- calc_max_prob_acc(data = dt_pred, mask_label = "cv")

dt_odd_peak_hpc <- calc_max_prob_acc(data = dt_pred, mask_label = "cv_hpc")

decoding_accuracy(

accuracy = subset(dt_odd_peak, classification == "ovr")$mean_acc,

alt_label = "greater")

decoding_accuracy(

accuracy = subset(dt_odd_peak_hpc, classification == "ovr")$mean_accuracy,

alt_label = "two.sided")## [1] "decoding accuracy: m = 69.22 %"

## [1] "decoding accuracy: sd = 11.18 %"

## [1] "decoding accuracy: range = 38-89 %"

## [1] "chance baseline = 20 %"

## [1] "effect size (cohen's d) = 4.40"

##

## Shapiro-Wilk normality test

##

## data: accuracy

## W = 0.94786, p-value = 0.08953

##

##

## One Sample t-test

##

## data: accuracy

## t = 26.409, df = 35, p-value < 2.2e-16

## alternative hypothesis: true mean is greater than 20

## 95 percent confidence interval:

## 66.07499 Inf

## sample estimates:

## mean of x

## 69.22417

##

##

## Wilcoxon signed rank exact test

##

## data: accuracy

## V = 666, p-value = 1.455e-11

## alternative hypothesis: true location is greater than 20

##

## [1] "decoding accuracy: m = 20.52 %"

## [1] "decoding accuracy: sd = 1.49 %"

## [1] "decoding accuracy: range = 17-24 %"

## [1] "chance baseline = 20 %"

## [1] "effect size (cohen's d) = 0.35"

##

## Shapiro-Wilk normality test

##

## data: accuracy

## W = 0.94782, p-value = 0.08928

##

##

## One Sample t-test

##

## data: accuracy

## t = 2.1007, df = 35, p-value = 0.04294

## alternative hypothesis: true mean is not equal to 20

## 95 percent confidence interval:

## 20.01755 21.02752

## sample estimates:

## mean of x

## 20.52254

##

##

## Wilcoxon signed rank test with continuity correction

##

## data: accuracy

## V = 440, p-value = 0.09424

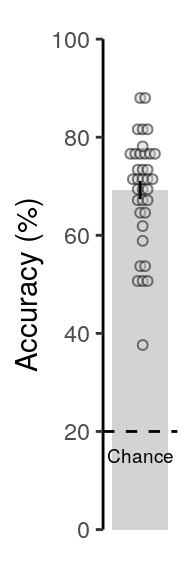

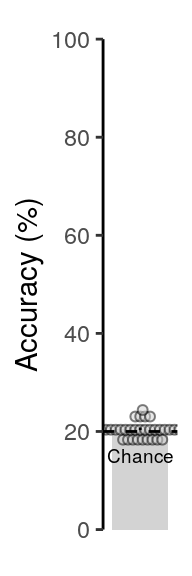

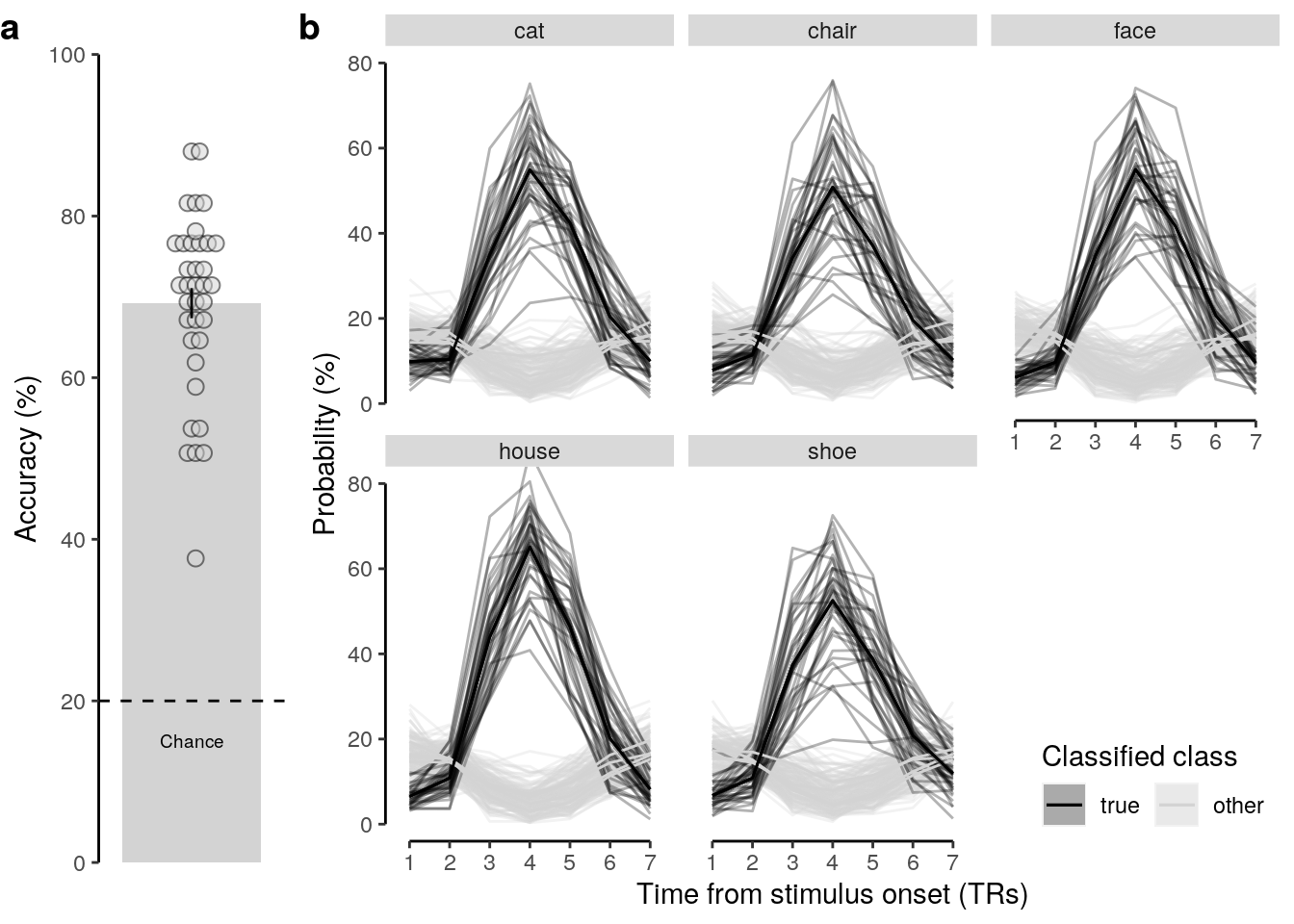

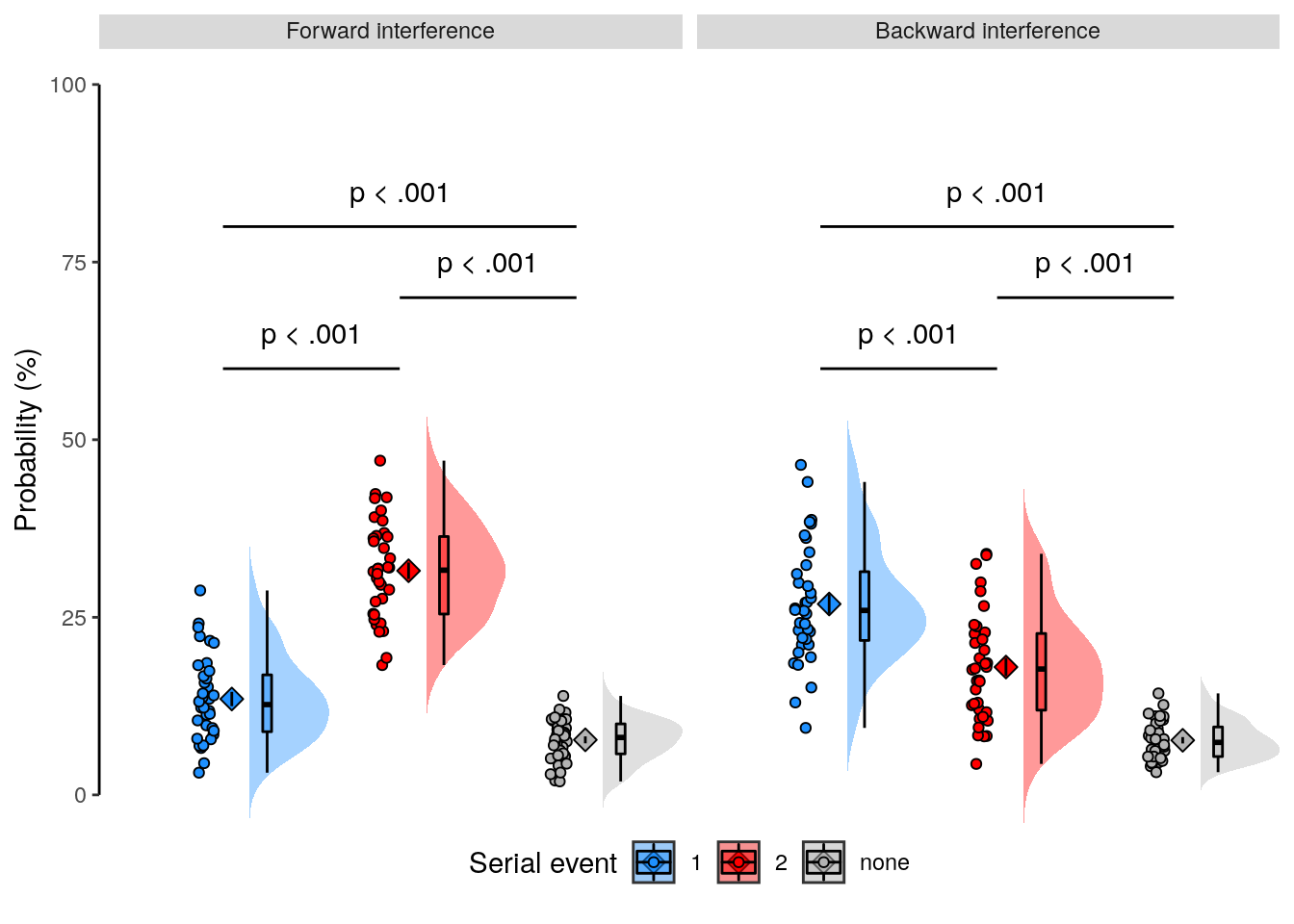

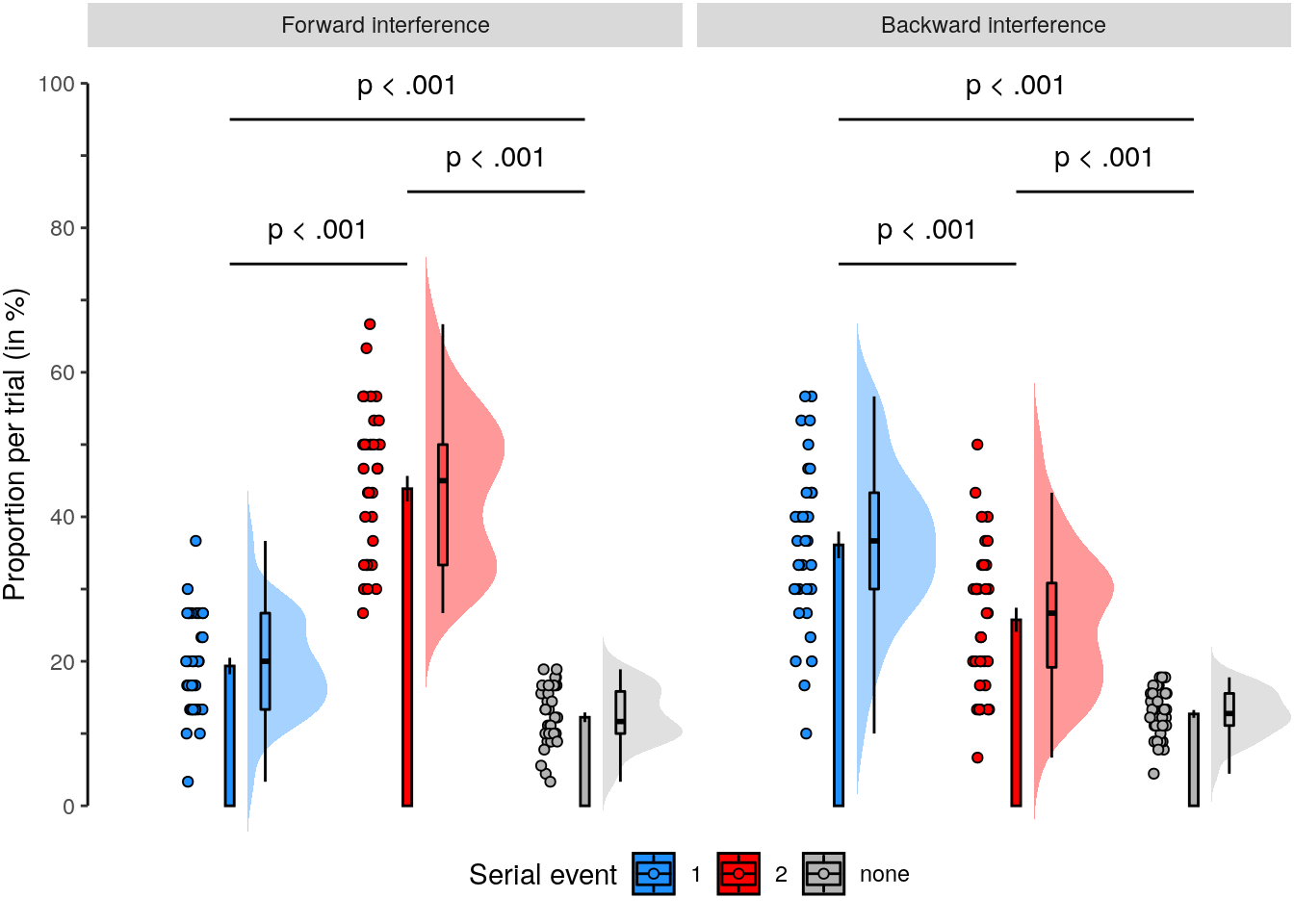

## alternative hypothesis: true location is not equal to 202.4.2.2 Figure 2a / S2a

We plot the mean decoding accuracy in occipito-temporal and hippocampal data:

plot_odd_peak <- function(data) {

plot.odd = ggplot(data = data, aes(

x = "mean_acc", y = as.numeric(mean_accuracy))) +

geom_bar(stat = "summary", fun = "mean", fill = "lightgray") +

geom_dotplot(binaxis = "y", stackdir = "center", stackratio = 0.5,

color = "black", fill = "lightgray", alpha = 0.5,

inherit.aes = TRUE, binwidth = 2) +

geom_errorbar(stat = "summary", fun.data = "mean_se", width = 0.0, color = "black") +

ylab("Accuracy (%)") + xlab("Condition") +

geom_hline(aes(yintercept = 20), linetype = "dashed", color = "black") +

scale_y_continuous(labels = label_fill(seq(0, 100, 20), mod = 1),

breaks = seq(0, 100, 20)) +

coord_capped_cart(left = "both", expand = TRUE, ylim = c(0, 100)) +

theme(axis.ticks.x = element_blank(), axis.line.x = element_blank()) +

theme(axis.title.x = element_blank(), axis.text.x = element_blank()) +

annotate("text", x = 1, y = 15, label = "Chance", color = "black",

size = rel(2.5), family = "Helvetica", fontface = "plain") +

theme(panel.border = element_blank(), axis.line = element_line()) +

theme(axis.line = element_line(colour = "black"),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank())

return(plot.odd)

}

fig_odd_peak <- plot_odd_peak(subset(dt_odd_peak, classification == "ovr"))

fig_odd_peak_hpc <- plot_odd_peak(subset(dt_odd_peak_hpc, classification == "ovr"))

fig_odd_peak; fig_odd_peak_hpc

2.4.2.3 Source Data File Fig. 2a

subset(dt_odd_peak, classification == "ovr") %>%

select(-num_trials, -classification) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_2a.csv"),

row.names = FALSE)2.4.2.4 Source Data File Fig. S2a

subset(dt_odd_peak_hpc, classification == "ovr") %>%

select(-num_trials, -classification) %>%

write.csv(., file = file.path(path_sourcedata, "source_data_figure_s2a.csv"),

row.names = FALSE)2.4.3 Fold-wise decoding accuracy

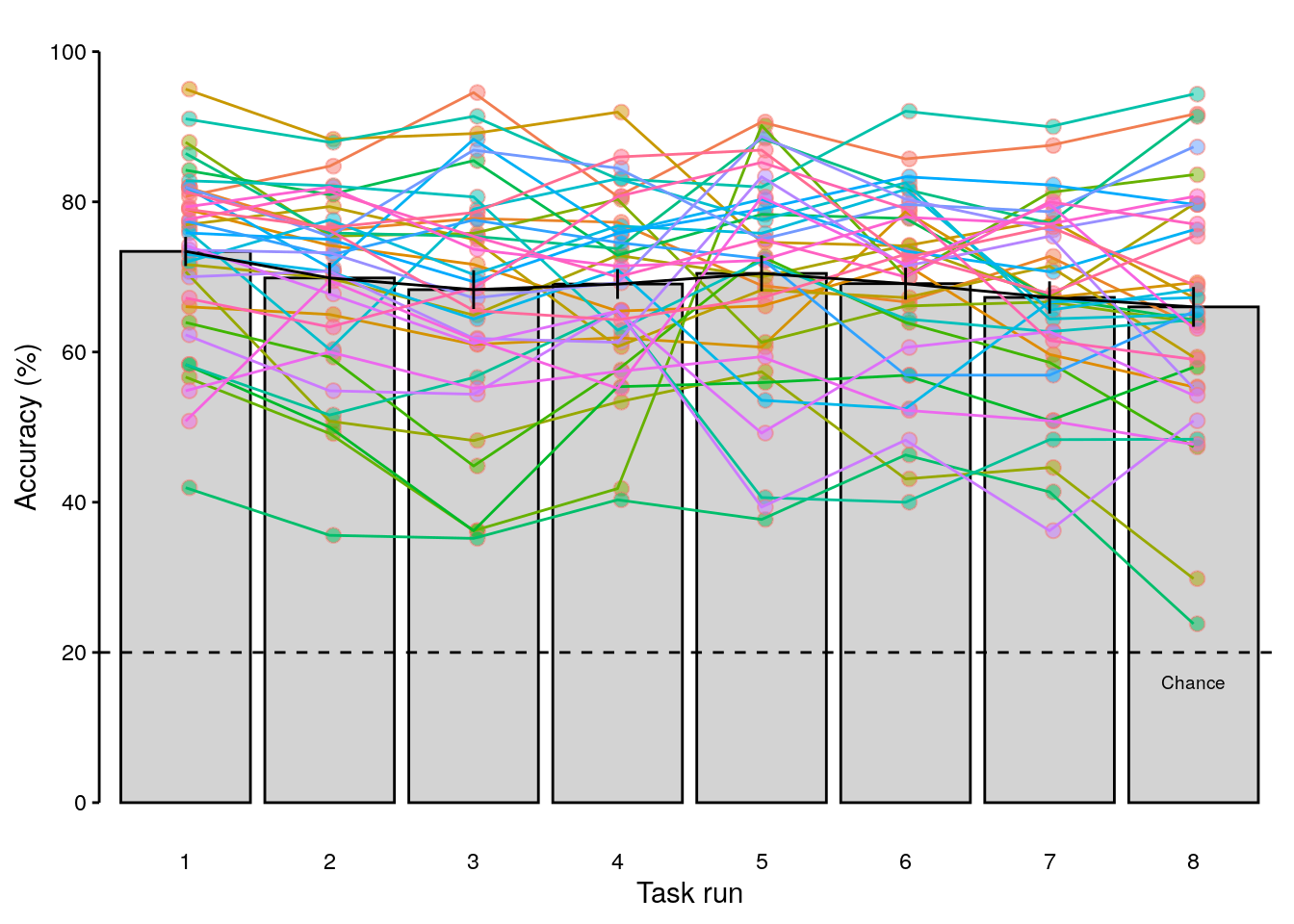

We calculate the mean decoding accuracy for each of the eight folds of the cross-validation procedure:

dt_odd_peak_run = dt_pred %>%

# select data for the oddball peak decoding

# leave out the redundant "other" class predictions:

filter(test_set == "test-odd_peak" & class != "other" & mask == "cv") %>%

# filter out excluded participants:

filter(!(id %in% sub_exclude)) %>%

setDT(.) %>%

# calculate average decoding accuracy for every participant and classification:

.[, by = .(classification, id, run_study, trial, stim), .(

max_prob_label = class[which.max(probability)],

num_classes = .N

)] %>%

# check if there are five classifier predictions per trial:

verify(num_classes == 5) %>%

# determine accuracy if label with highest probability matches stimulus:

.[, "accuracy" := ifelse(stim == max_prob_label, 1, 0)]

# print results table:

rmarkdown::paged_table(dt_odd_peak_run)# calculate average decoding accuracy for every participant and classification:

dt_odd_peak_run_mean <- dt_odd_peak_run %>%

.[, by = .(classification, id, run_study), .(

mean_accuracy = mean(accuracy) * 100,

num_trials = length(unique(trial))

)] %>%

# verify if the number of participants matches for every classification and run:

verify(.[, by = .(classification, run_study),

.(num_subs = .N)]$num_subs == 36) %>%

setorder(., classification, id, run_study)

# print the table:

rmarkdown::paged_table(dt_odd_peak_run_mean)

lme_odd_peak_run <- lmerTest::lmer(

mean_accuracy ~ run_study + (1 | id), control = lcctrl,

data = subset(dt_odd_peak_run_mean, classification == "ovr")

)

summary(lme_odd_peak_run)

anova(lme_odd_peak_run)

emmeans_results = emmeans(lme_odd_peak_run, list(pairwise ~ run_study))

emmeans_results## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: mean_accuracy ~ run_study + (1 | id)

## Data: subset(dt_odd_peak_run_mean, classification == "ovr")

## Control: lcctrl

##

## REML criterion at convergence: 2081.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.3758 -0.6237 0.0020 0.6078 3.1304

##

## Random effects:

## Groups Name Variance Std.Dev.

## id (Intercept) 117.30 10.830

## Residual 63.38 7.961

## Number of obs: 288, groups: id, 36

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 73.400 2.240 70.879 32.764 < 2e-16 ***

## run_study2 -3.538 1.876 245.000 -1.885 0.060551 .

## run_study3 -5.096 1.876 245.000 -2.716 0.007079 **

## run_study4 -4.348 1.876 245.000 -2.317 0.021318 *

## run_study5 -2.934 1.876 245.000 -1.564 0.119200

## run_study6 -4.294 1.876 245.000 -2.288 0.022970 *

## run_study7 -6.131 1.876 245.000 -3.268 0.001240 **

## run_study8 -7.391 1.876 245.000 -3.939 0.000107 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr) rn_st2 rn_st3 rn_st4 rn_st5 rn_st6 rn_st7

## run_study2 -0.419

## run_study3 -0.419 0.500

## run_study4 -0.419 0.500 0.500

## run_study5 -0.419 0.500 0.500 0.500

## run_study6 -0.419 0.500 0.500 0.500 0.500

## run_study7 -0.419 0.500 0.500 0.500 0.500 0.500

## run_study8 -0.419 0.500 0.500 0.500 0.500 0.500 0.500

## Type III Analysis of Variance Table with Satterthwaite's method

## Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

## run_study 1239.3 177.04 7 245 2.7936 0.008175 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## $`emmeans of run_study`

## run_study emmean SE df lower.CL upper.CL

## 1 73.4 2.24 70.9 68.9 77.9

## 2 69.9 2.24 70.9 65.4 74.3

## 3 68.3 2.24 70.9 63.8 72.8

## 4 69.1 2.24 70.9 64.6 73.5

## 5 70.5 2.24 70.9 66.0 74.9

## 6 69.1 2.24 70.9 64.6 73.6

## 7 67.3 2.24 70.9 62.8 71.7

## 8 66.0 2.24 70.9 61.5 70.5

##

## Degrees-of-freedom method: kenward-roger

## Confidence level used: 0.95

##

## $`pairwise differences of run_study`

## contrast estimate SE df t.ratio p.value

## 1 - 2 3.5379 1.88 245 1.885 0.5622

## 1 - 3 5.0962 1.88 245 2.716 0.1227

## 1 - 4 4.3480 1.88 245 2.317 0.2885

## 1 - 5 2.9339 1.88 245 1.564 0.7715

## 1 - 6 4.2938 1.88 245 2.288 0.3043

## 1 - 7 6.1312 1.88 245 3.268 0.0268

## 1 - 8 7.3910 1.88 245 3.939 0.0027

## 2 - 3 1.5584 1.88 245 0.831 0.9912

## 2 - 4 0.8101 1.88 245 0.432 0.9999

## 2 - 5 -0.6039 1.88 245 -0.322 1.0000

## 2 - 6 0.7560 1.88 245 0.403 0.9999

## 2 - 7 2.5934 1.88 245 1.382 0.8647

## 2 - 8 3.8532 1.88 245 2.053 0.4482

## 3 - 4 -0.7482 1.88 245 -0.399 0.9999

## 3 - 5 -2.1623 1.88 245 -1.152 0.9442

## 3 - 6 -0.8024 1.88 245 -0.428 0.9999

## 3 - 7 1.0350 1.88 245 0.552 0.9993

## 3 - 8 2.2948 1.88 245 1.223 0.9245

## 4 - 5 -1.4140 1.88 245 -0.754 0.9951

## 4 - 6 -0.0541 1.88 245 -0.029 1.0000

## 4 - 7 1.7832 1.88 245 0.950 0.9806

## 4 - 8 3.0430 1.88 245 1.622 0.7368

## 5 - 6 1.3599 1.88 245 0.725 0.9962

## 5 - 7 3.1973 1.88 245 1.704 0.6847

## 5 - 8 4.4571 1.88 245 2.375 0.2582

## 6 - 7 1.8374 1.88 245 0.979 0.9770

## 6 - 8 3.0972 1.88 245 1.651 0.7189

## 7 - 8 1.2598 1.88 245 0.671 0.9976

##

## Degrees-of-freedom method: kenward-roger

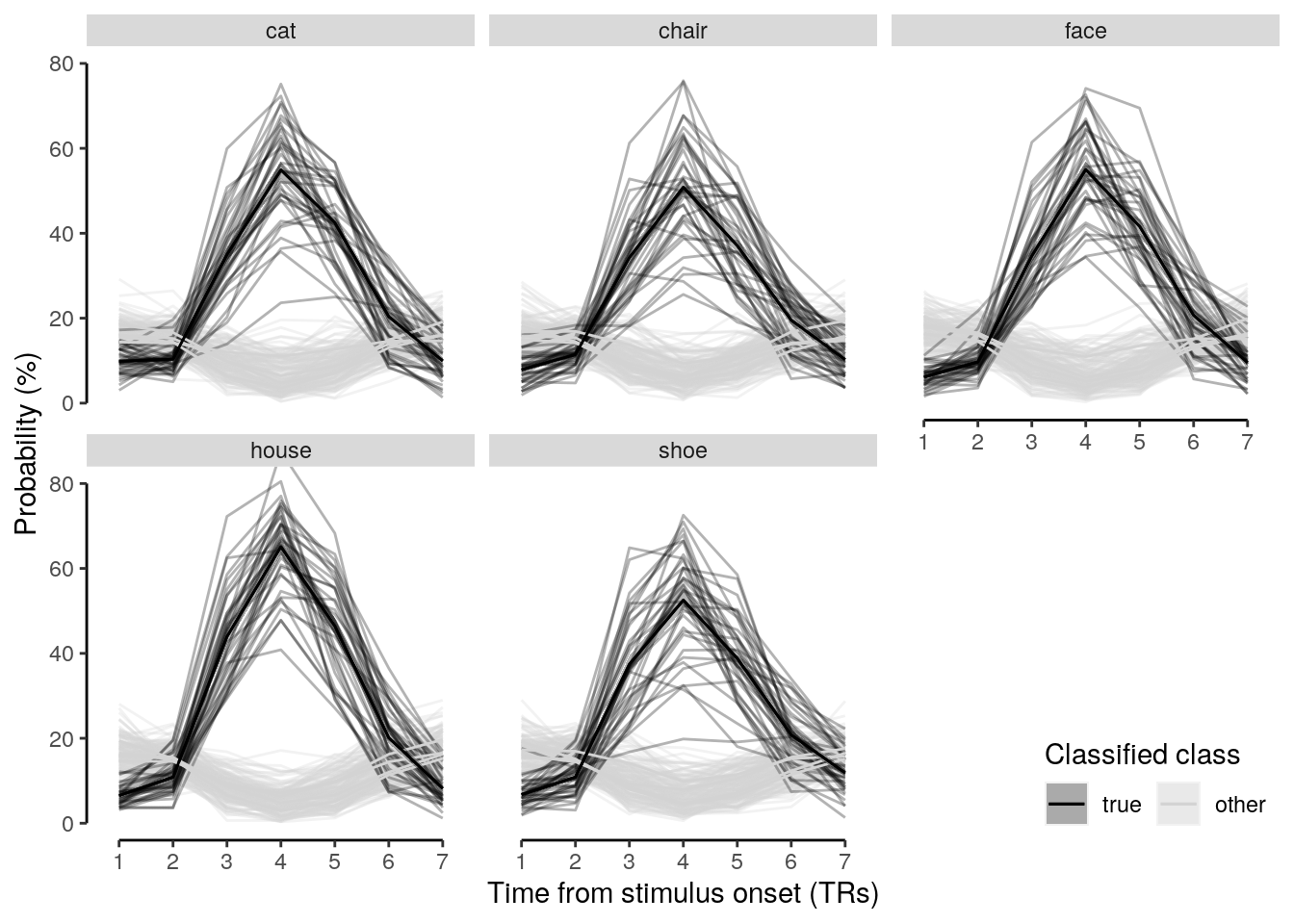

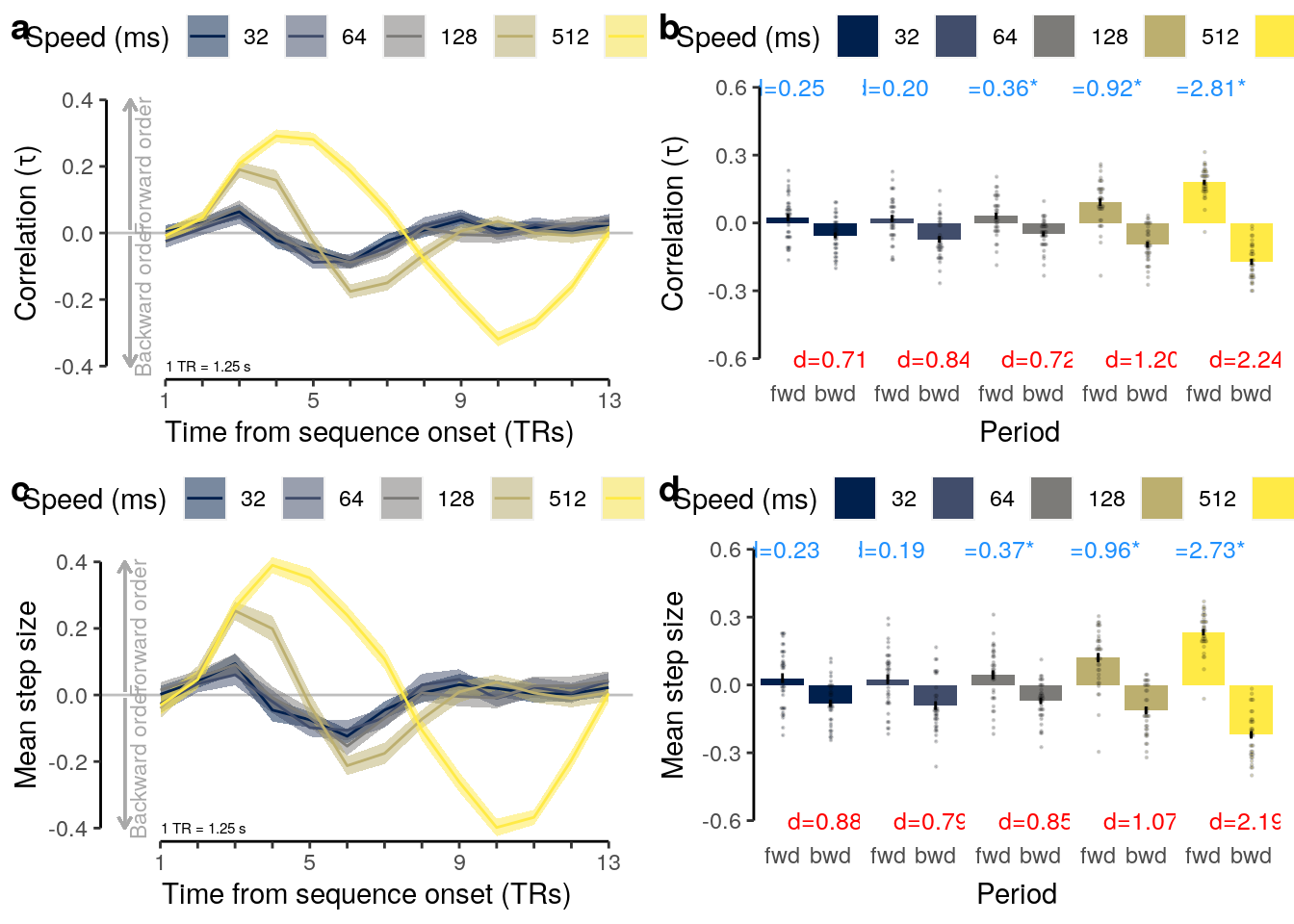

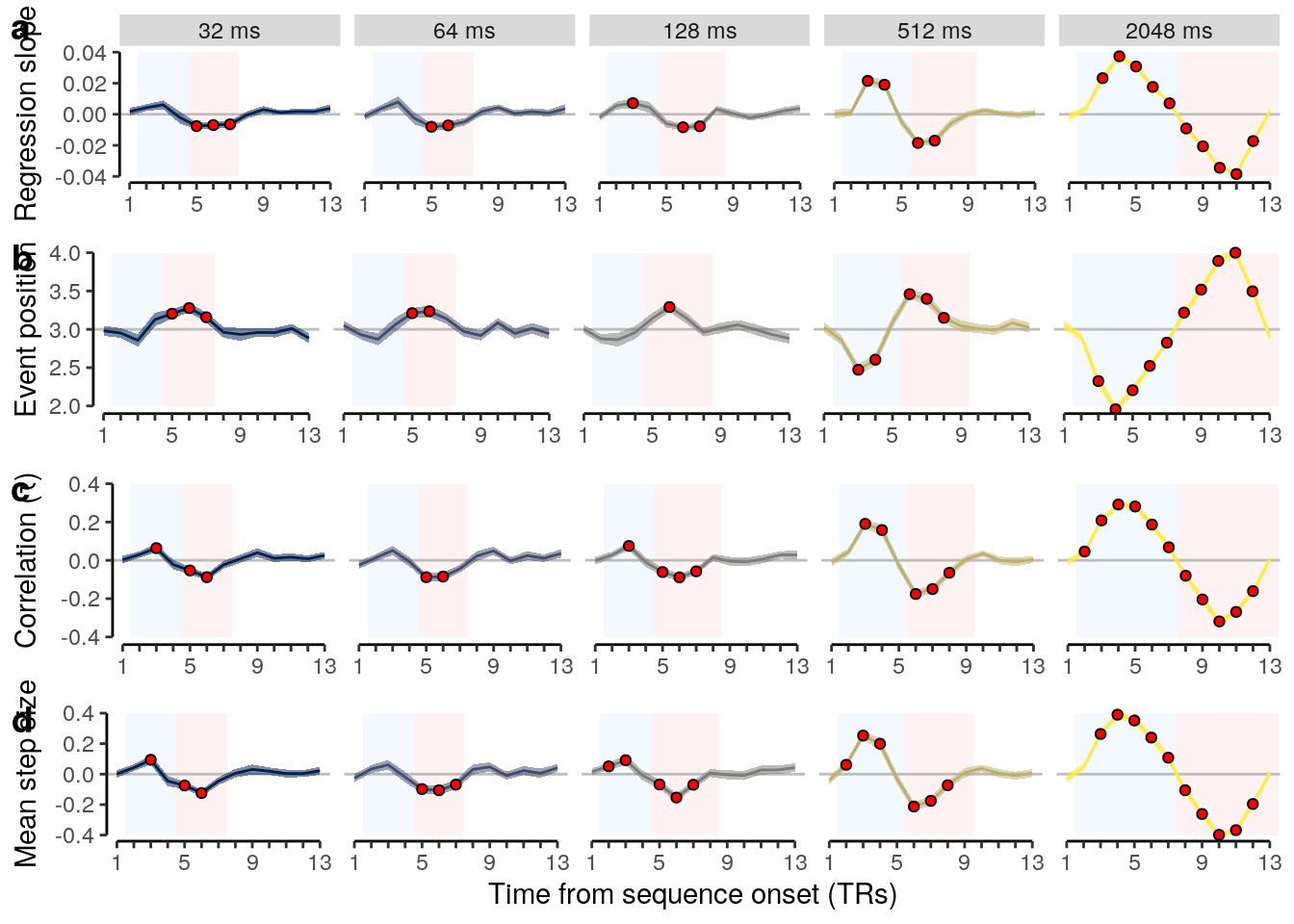

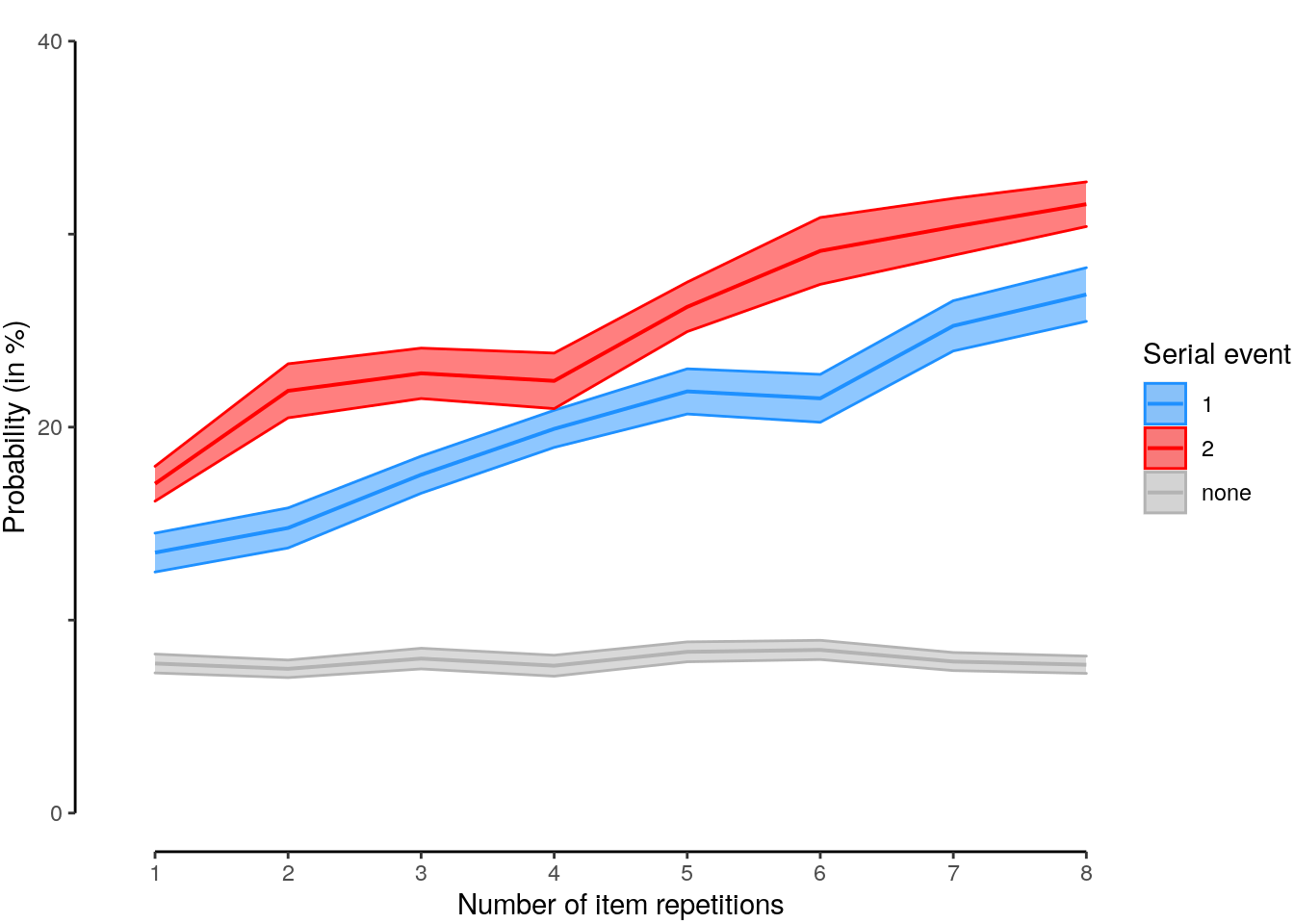

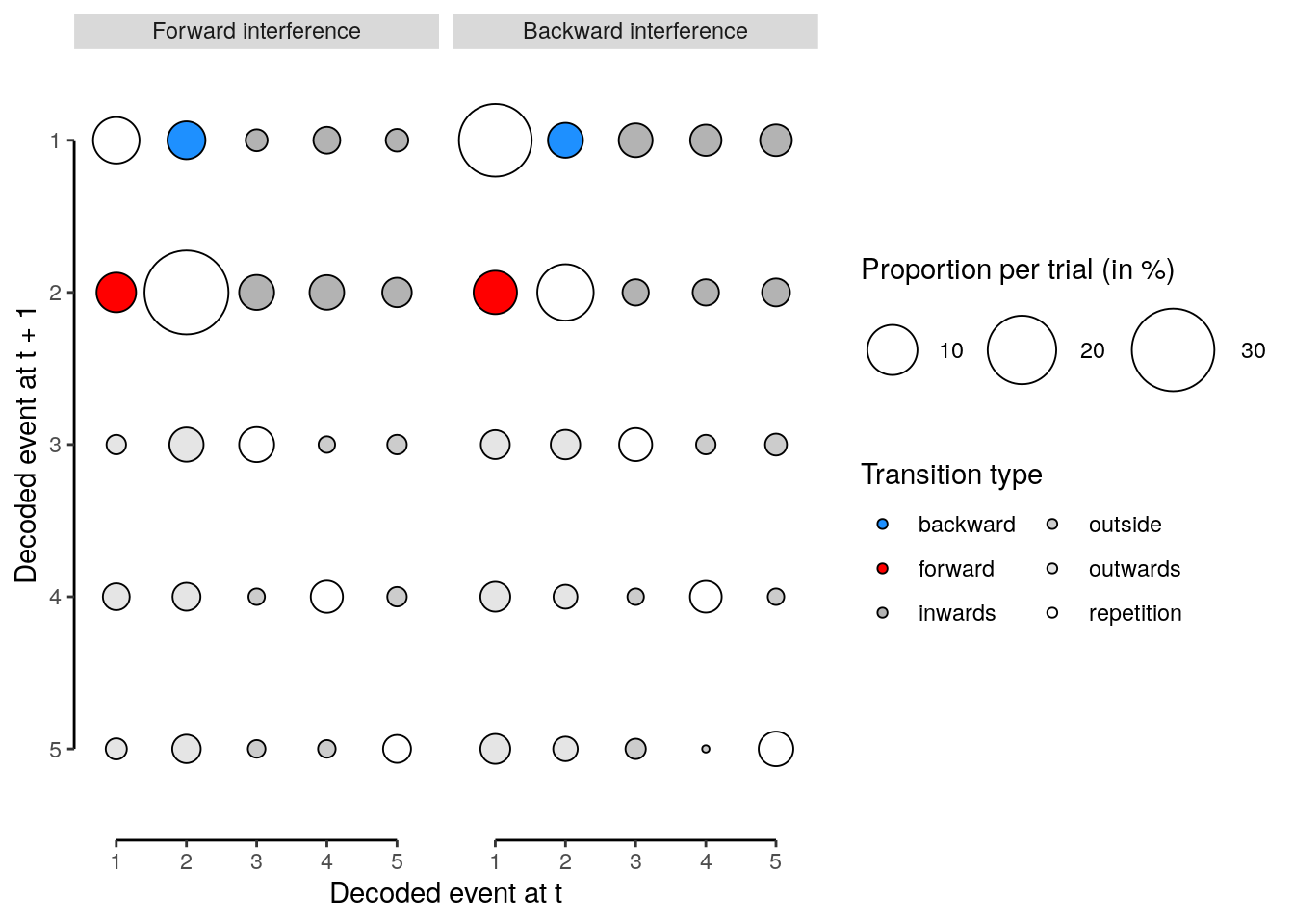

## P value adjustment: tukey method for comparing a family of 8 estimates2.5 Single-trial decoding time courses

We calculate the multivariate decoding time courses on single slow trials:

dt_odd_long_sub = dt_pred %>%

# select data for the oddball long decoding

# leave out the redundant "other" class predictions:

filter(test_set == "test-odd_long" & class != "other" & mask == "cv") %>%

# filter out excluded participants:

filter(!(id %in% sub_exclude)) %>% setDT(.) %>%

# average across stimuli and runs for each participant

# create an index for the true stimulus presented on each trial:

.[, by = .(classification, id, seq_tr, class, stim), .(

num = .N,

mean_prob = mean(probability * 100),

current_stim = as.numeric(class == unique(stim))

)]

dt_odd_long_mean = dt_odd_long_sub %>%

# average across participants:

.[, by = .(classification, seq_tr, class, stim, current_stim), .(

mean_prob = mean(mean_prob),

num_subs = .N,

sem_upper = (mean(mean_prob) + (sd(mean_prob)/sqrt(.N))),

sem_lower = (mean(mean_prob) - (sd(mean_prob)/sqrt(.N)))

)] %>%

# create an additional variable that expresses time in seconds:

mutate(time = (seq_tr - 1) * 1.25) %>%

# check if the number of participants is correct:

verify(all(num_subs == 36))2.5.0.1 Figure 2b

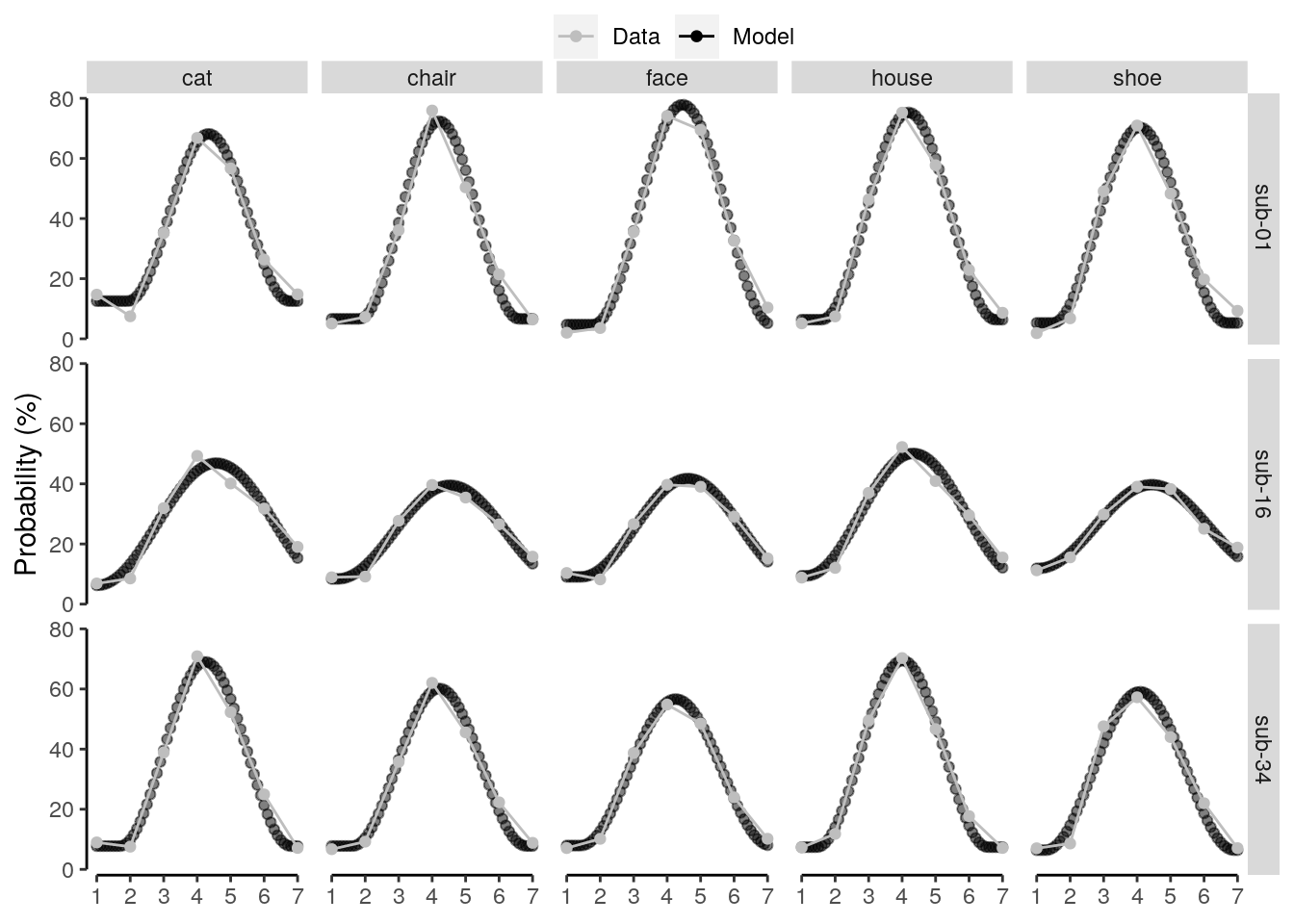

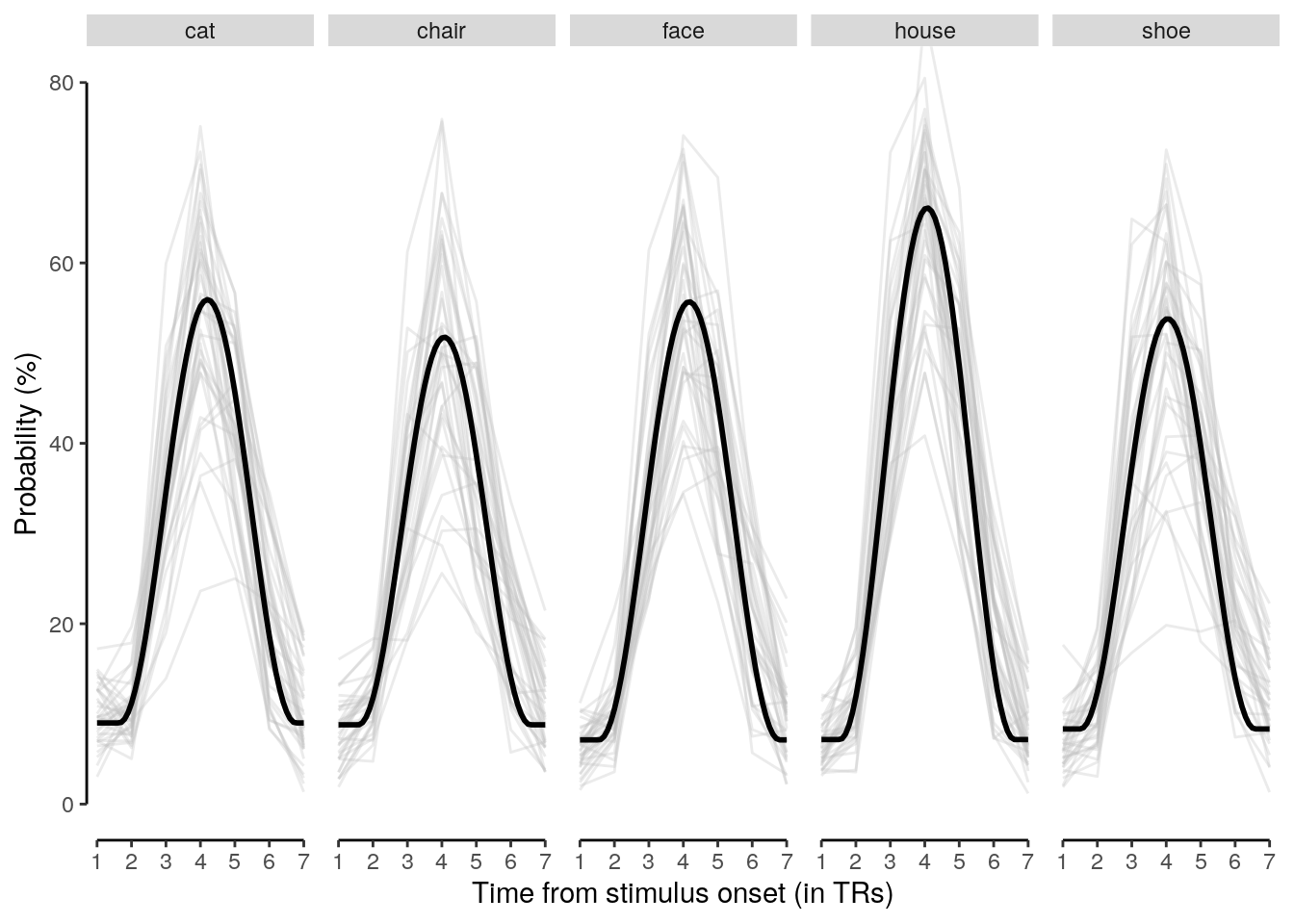

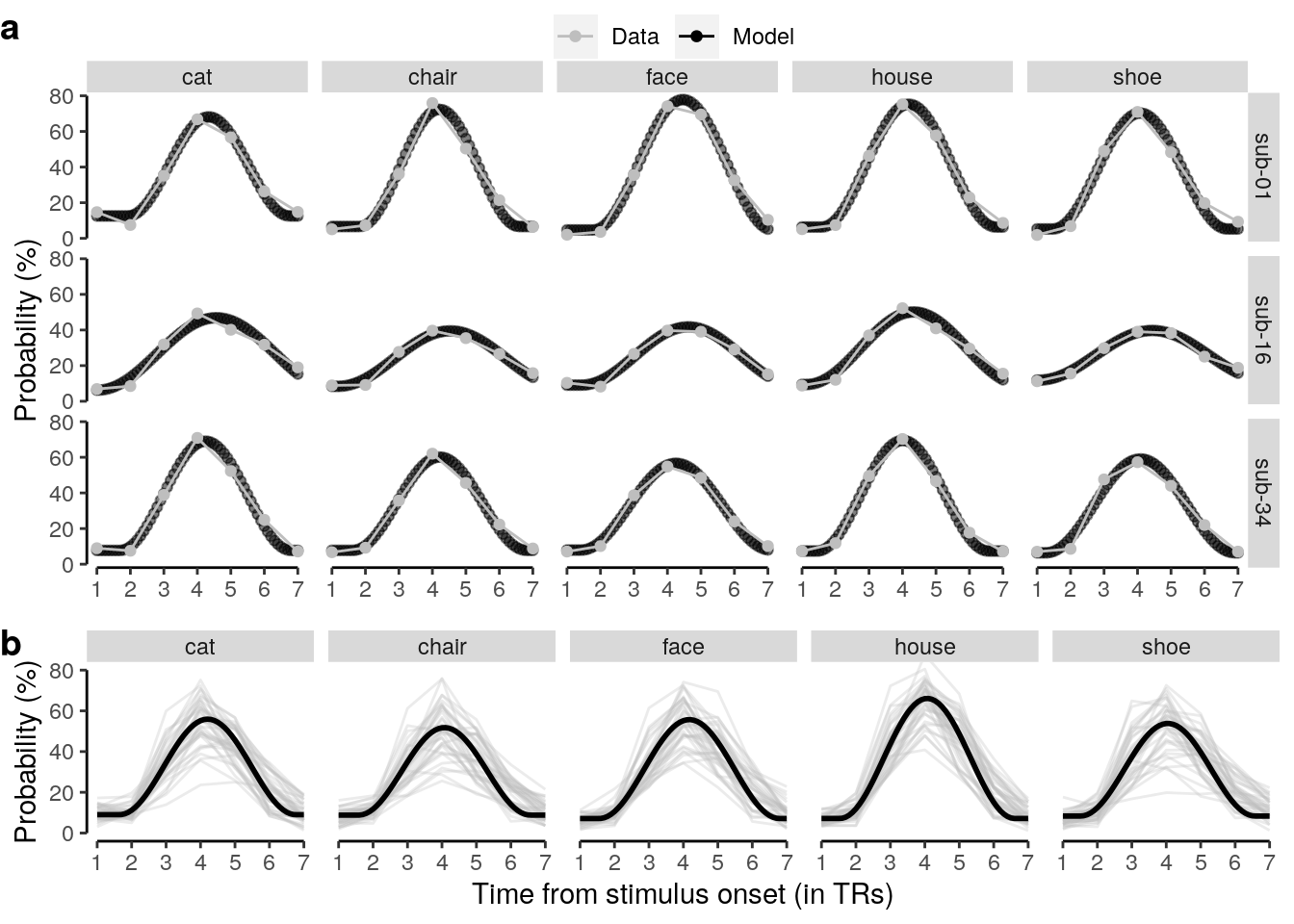

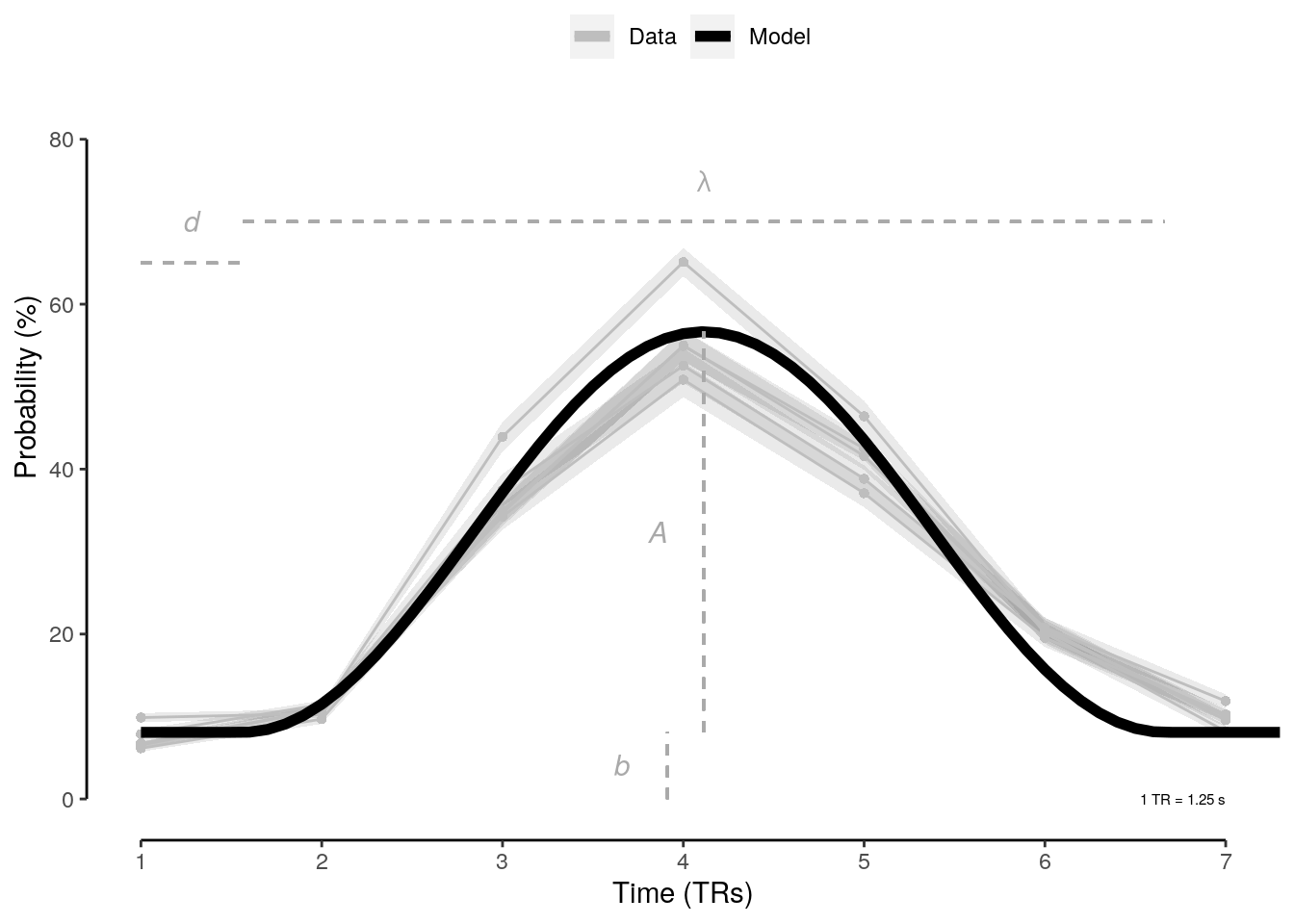

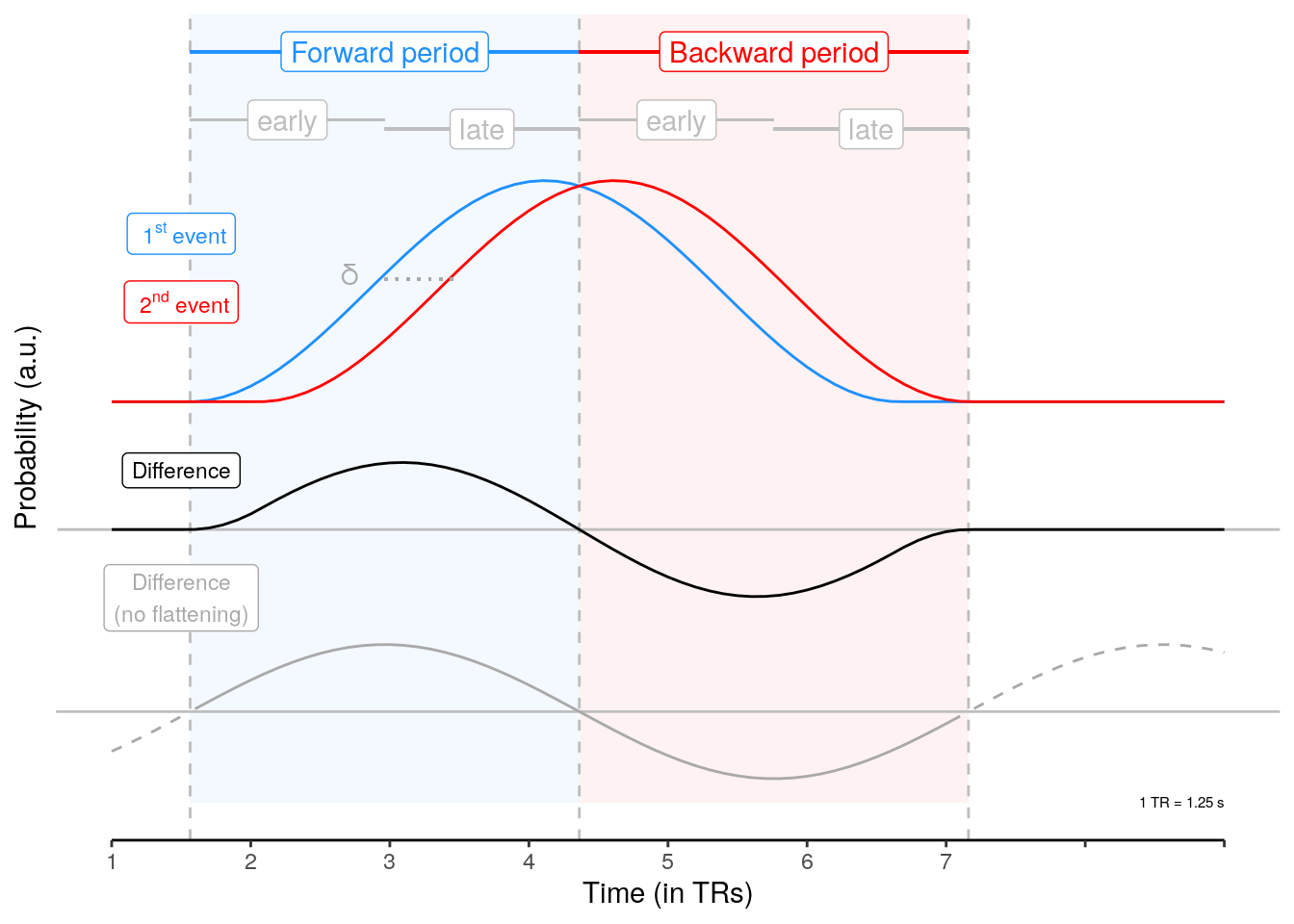

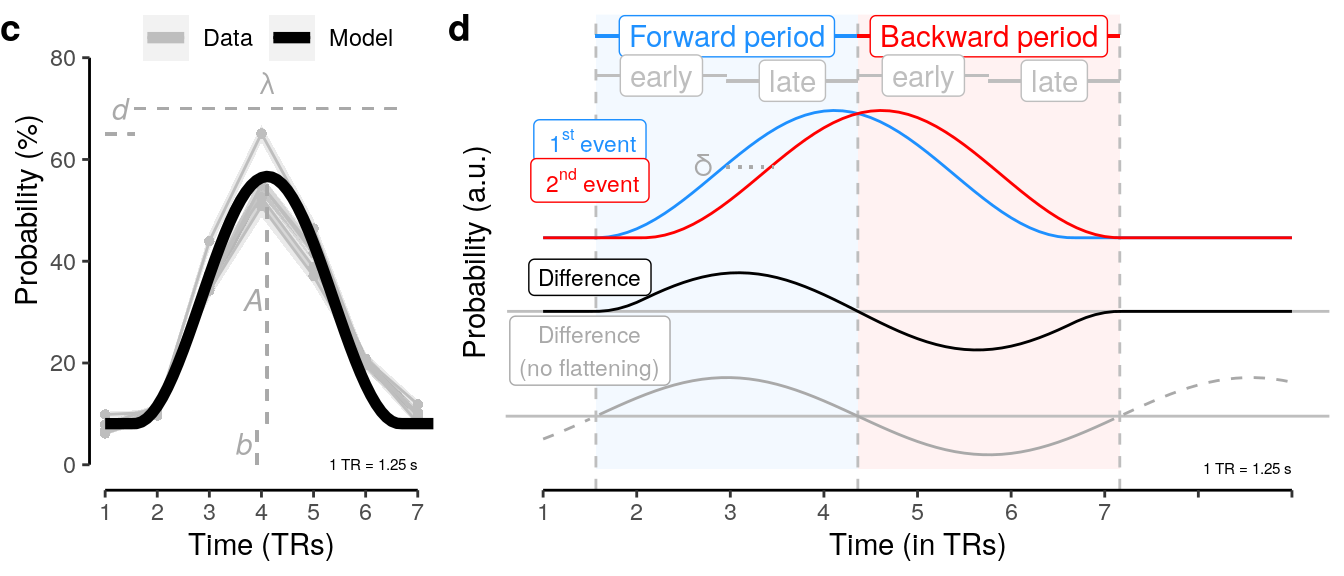

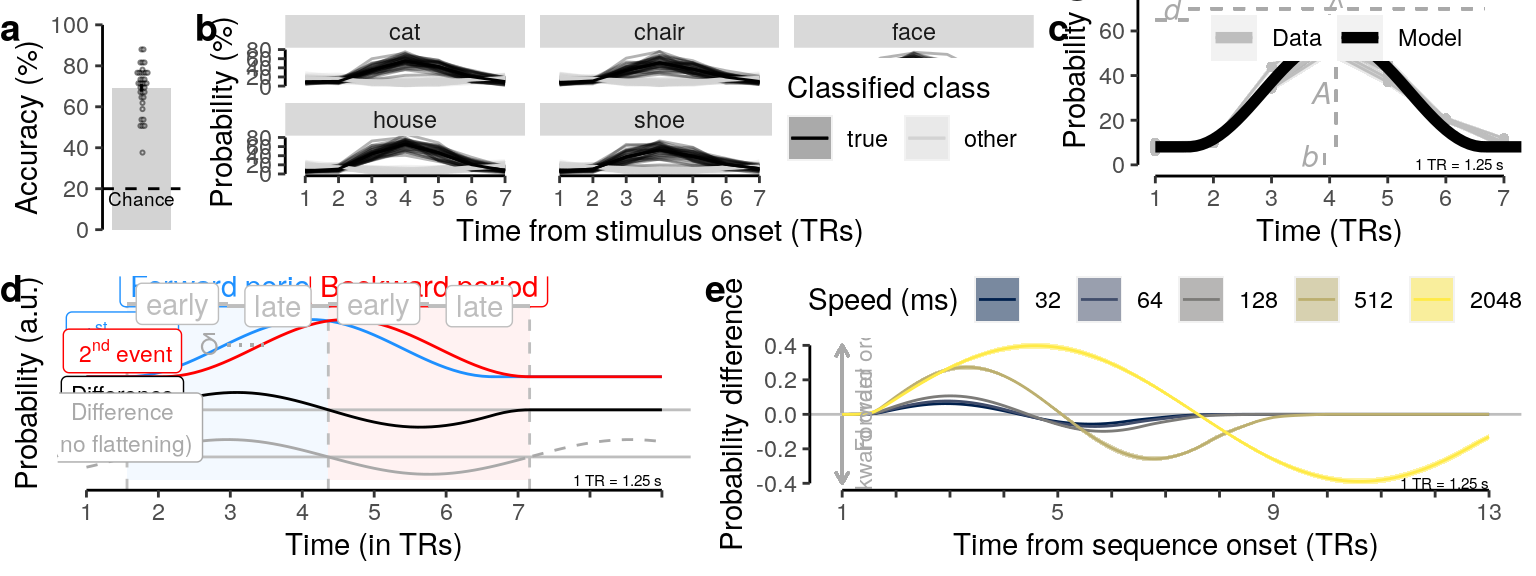

We plot the single-trial multi-variate decoding time courses on slow trials:

plot.odd.long = ggplot(data = subset(dt_odd_long_mean, classification == "ovr"), aes(

x = as.factor(seq_tr), y = as.numeric(mean_prob))) +

facet_wrap(~ as.factor(stim)) +

geom_line(data = subset(dt_odd_long_sub, classification == "ovr"), aes(

group = as.factor(interaction(id, class)), color = fct_rev(as.factor(current_stim))), alpha = 0.0) +

geom_line(data = subset(dt_odd_long_sub, classification == "ovr" & current_stim == 0), aes(

group = as.factor(interaction(id, class)), color = fct_rev(as.factor(current_stim))), alpha = 0.3) +

geom_line(data = subset(dt_odd_long_sub, classification == "ovr" & current_stim == 1), aes(

group = as.factor(interaction(id, class)), color = fct_rev(as.factor(current_stim))), alpha = 0.3) +

geom_ribbon(

data = subset(dt_odd_long_mean, classification == "ovr"),

aes(ymin = sem_lower, ymax = sem_upper, fill = fct_rev(as.factor(current_stim)),

group = as.factor(class)), alpha = 0.0, color = NA) +

geom_line(

data = subset(dt_odd_long_mean, classification == "ovr", alpha = 0),

aes(color = fct_rev(as.factor(current_stim)), group = as.factor(class))) +

geom_ribbon(

data = subset(dt_odd_long_mean, classification == "ovr" & current_stim == 1),

aes(ymin = sem_lower, ymax = sem_upper, fill = fct_rev(as.factor(current_stim)),

group = as.factor(class)), alpha = 0.3, color = NA) +

geom_line(

data = subset(dt_odd_long_mean, classification == "ovr" & current_stim == 1),

aes(color = fct_rev(as.factor(current_stim)), group = as.factor(class))) +

ylab("Probability (%)") + xlab("Time from stimulus onset (TRs)") +

scale_fill_manual(name = "Classified class", values = c("black", "lightgray"), labels = c("true", "other")) +

scale_color_manual(name = "Classified class", values = c("black", "lightgray"), labels = c("true", "other")) +

coord_capped_cart(left = "both", bottom = "both", expand = TRUE, ylim = c(0, 80)) +

theme(legend.position = c(1, 0), legend.justification = c(1, 0)) +

#theme(legend.position = c(0.95, 0.8), legend.justification = c(1, 0)) +

#theme(legend.title = element_text(size = 8), legend.text = element_text(size = 8)) +

#theme(legend.key.size = unit(0.7, "line")) +

scale_x_discrete(labels = label_fill(seq(0, 7, 1), mod = 1), breaks = seq(0, 7, 1)) +

guides(color = guide_legend(ncol = 2), fill = guide_legend(ncol = 2)) +

theme(strip.text.x = element_text(margin = margin(b = 2, t = 2))) +

theme(panel.border = element_blank(), axis.line = element_line()) +

theme(axis.line = element_line(colour = "black"),

panel.grid.major = element_blank(),

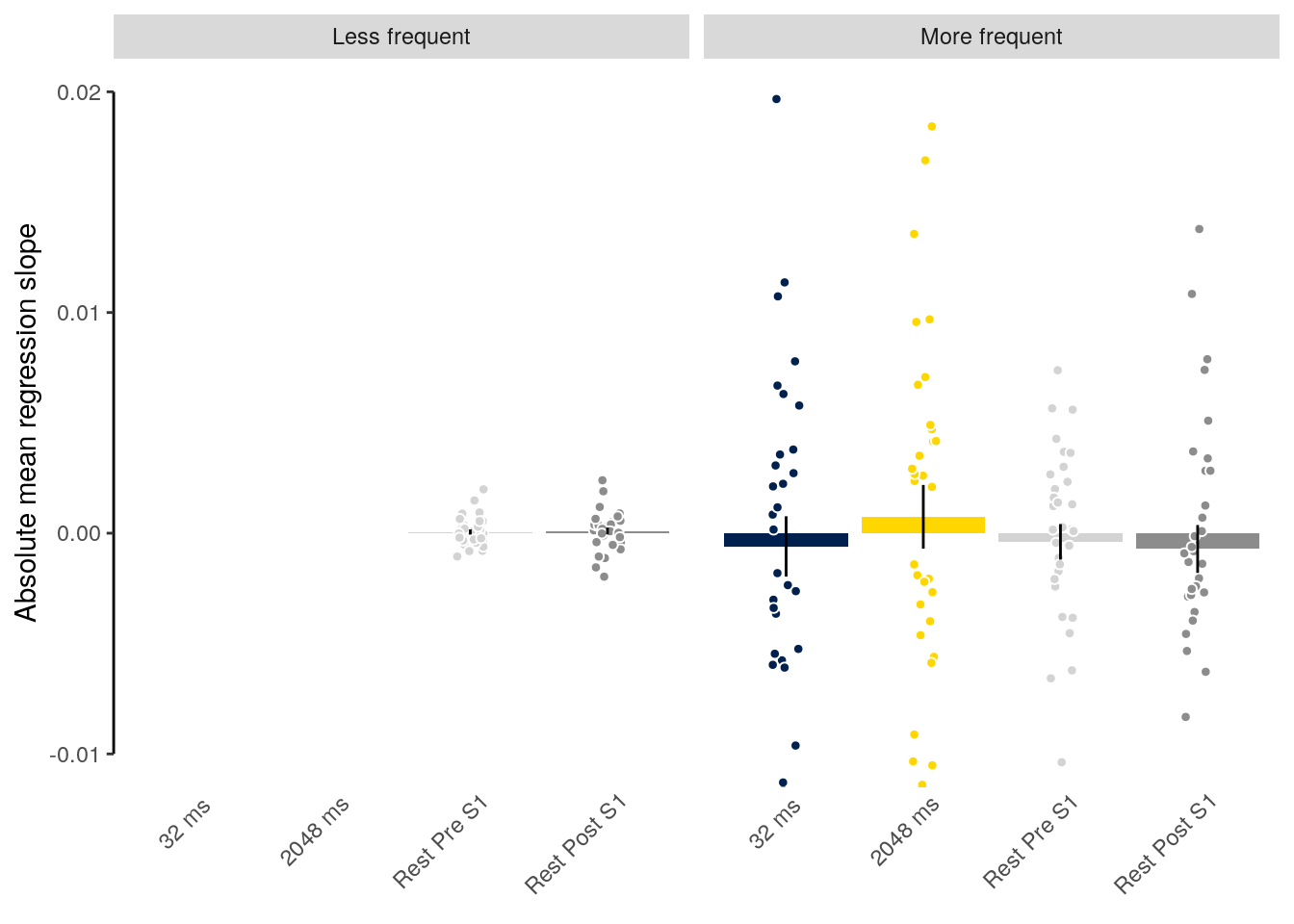

panel.grid.minor = element_blank(),